Colophon

Practical Identity Management With MidPoint

by Radovan Semančík et al.

Evolveum

Book revision: 2.3

Publication date: 2024-11-19

Corresponding midPoint version: 4.8.5

© 2015-2024 Radovan Semančík and Evolveum, s.r.o. All rights reserved.

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Major sponsoring for this book was provided by:

Introduction

It’s a dangerous business, Frodo, going out your door. You step onto the road, and if you don’t keep your feet, there’s no knowing where you might be swept off to.

The Lord of the Rings by J.R.R. Tolkien

Many years ago we started a project. Because we had to. Back then, we didn’t think too much about business and markets and things like that. We were focused on technology. The project started, and it simply went on. It had its ups and downs – but all the time there was purpose, improvement and pure engineering passion. The effort brought fruits, and now there is a product like no other: midPoint.

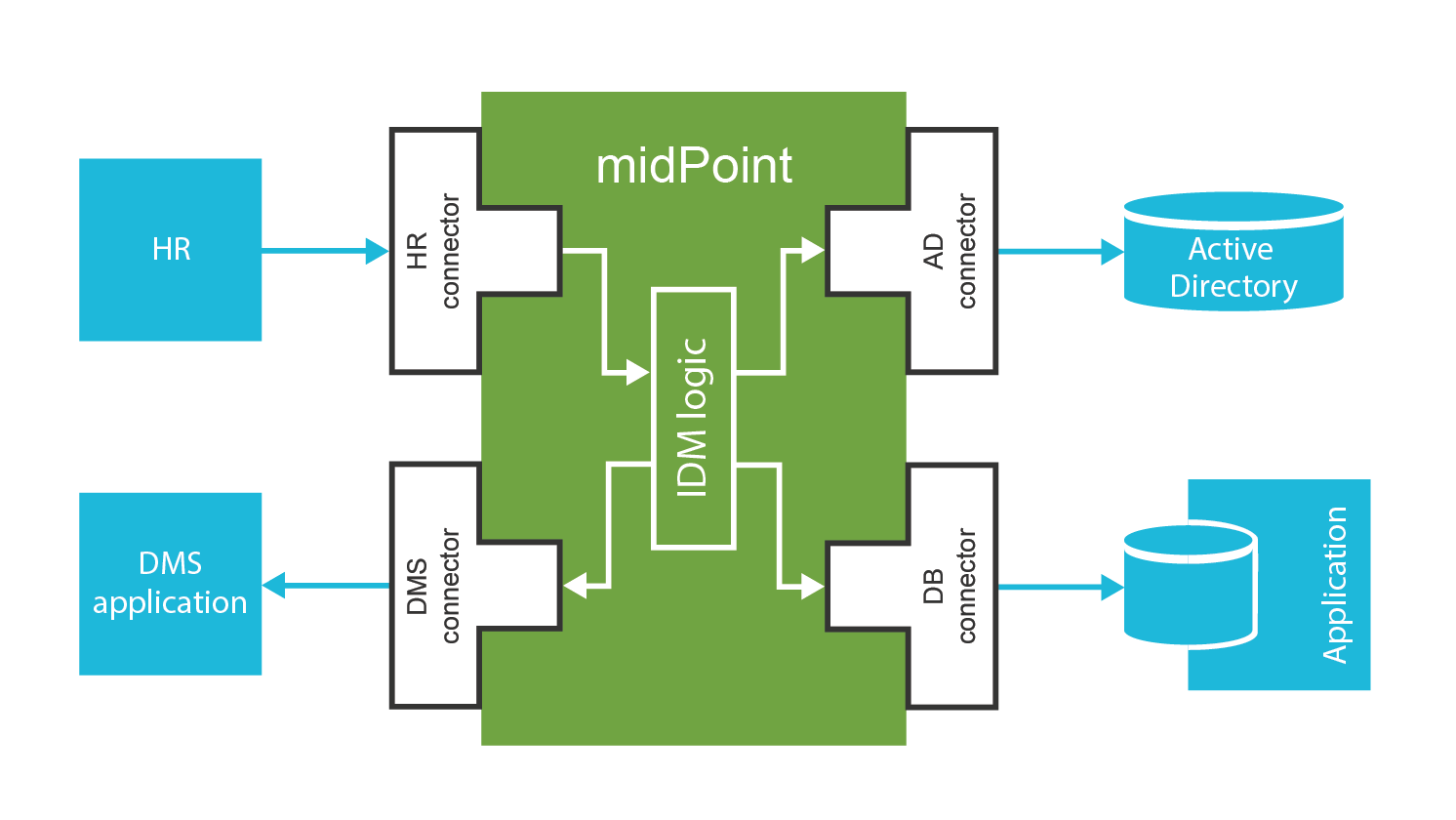

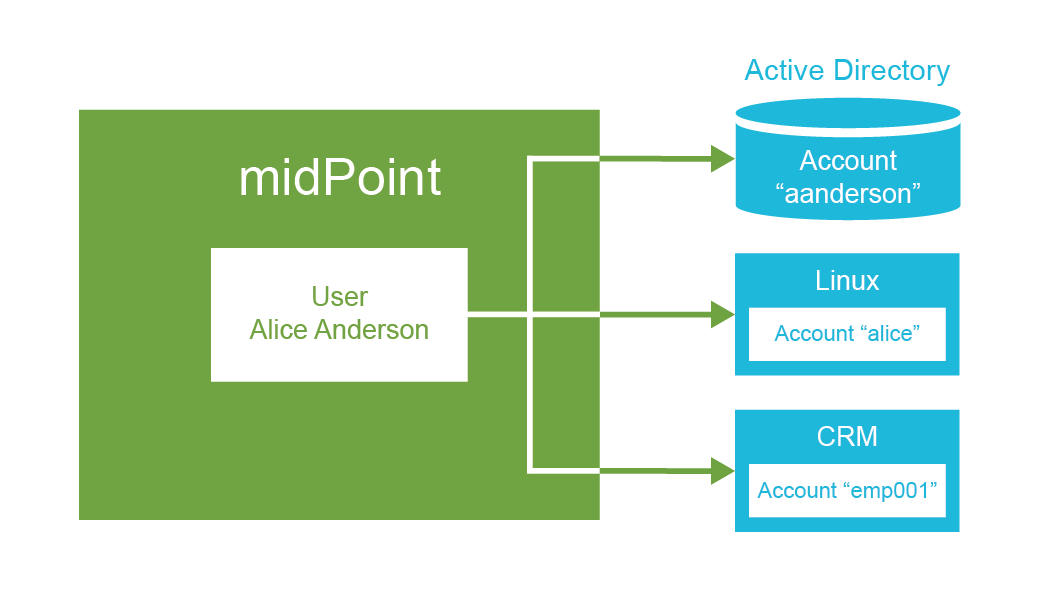

MidPoint is an identity governance and administration (IGA) platform. It is a comprehensive and feature-rich system. MidPoint can handle identity lifecycle management, identity governance and analytics. It can speed up the processes that create accounts for a new employee, student or customer. MidPoint can automatically disable accounts. MidPoint manages assignment of roles and entitlements to employees, partners, agents, contractors, customers and students. MidPoint makes sure the policies are continually maintained and enforced. It governs the processes of access certification (attestations). It provides auditing and reporting based on the identity data.

Moreover, midPoint has one inherent advantage over the competing products: midPoint is a completely open source platform. Open source is the fundamental philosophy of midPoint. We believe that open source is a critical aspect in the development of quality software. Open source principles guide the development of the midPoint community: partners, contributors, supporters and all the engineers that work with midPoint. Open source character means that any engineer can completely understand how midPoint works. It also means that midPoint can be modified as needed, that issues can be fixed quickly, and especially that the continuity of midPoint development is ensured. After all these years with midPoint, we simply cannot imagine using any identity technology which is not open source.

There are engineers in our team who have been dealing with identity management deployments since the early 2000s. The term "Identity and Access Management" (IAM) was not even invented back then. We have seen a lot of IAM solutions during our careers. An identity management system, such as midPoint, is the core of the vast majority of these solutions. The identity management platform is the beating heart of IAM deployments. We have designed midPoint specifically for that role. When it is used by the right hands, midPoint can do miracles. Which is exactly what this book is all about: the right use of midPoint to build practical identity management solutions.

This book will tell you how to build and deploy an identity management and governance solution. It will also tell you why to do it in the first place. The book will explain not just the features and configuration options. It will also describe the motivation and the underlying principles of identity management. Understanding the principles is at least as important as knowing the mechanics of an identity management product. The book describes how things work, when they work. It also tries to describe the limitations, drawbacks and pitfalls. The limitations are often much more important than the features, especially when designing a new solution on a green field.

This book goes for quite a deep dive. It explains how, it also explains why, it includes a lot of details. It is meant for people that seek deep understanding of identity and access management. This is the best way for some people, yet it may be quite challenging. There may be easier ways to start with midPoint. There are videos, trainings, and there is a first steps methodology designed especially for easy start with midPoint. Therefore, if you want to start as quickly as possible, this book may not suit your needs. However, if you want to really know what you are doing, you are at the right place.

The first chapter of this book is an introduction to the basic concepts of Identity and Access Management (IAM). It is very general, and it does not deal with midPoint at all. Therefore, if you are familiar with Identity and Access Management, feel free to skip the first chapter. However, according to our experience, this chapter has some things to tell even to experienced IAM engineers. This chapter contains important information to put midPoint in broader context. You will need that information to build a complete IAM solution.

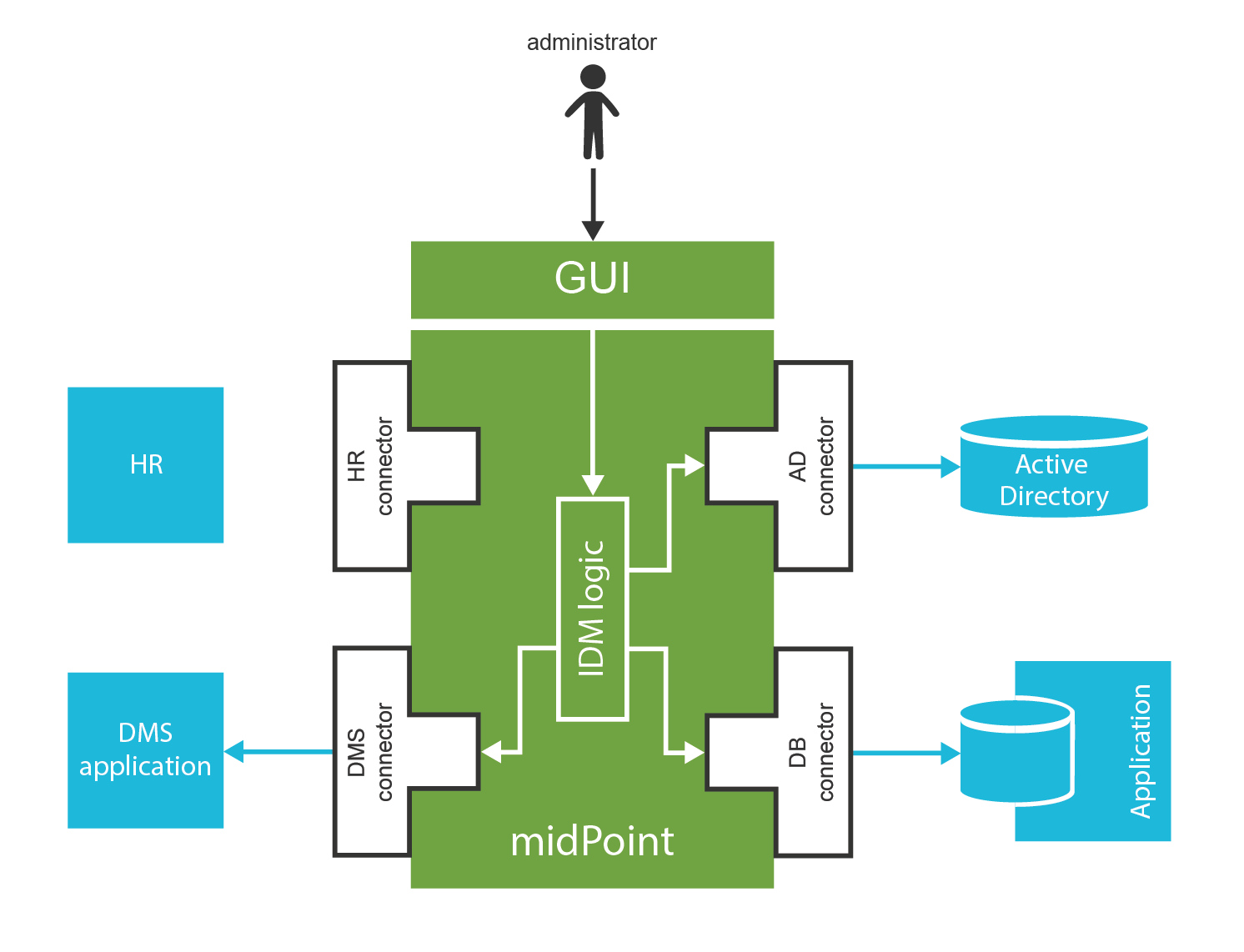

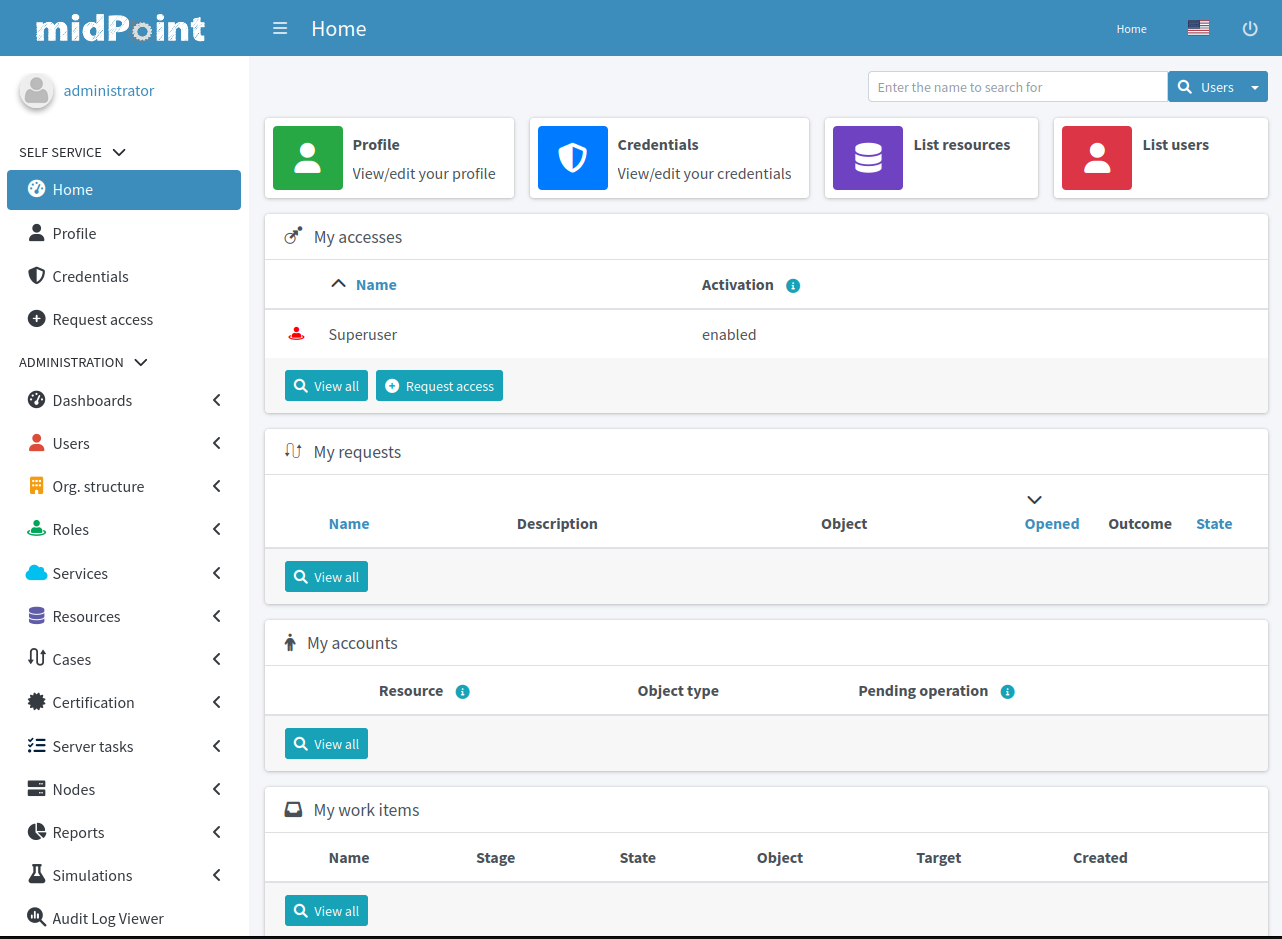

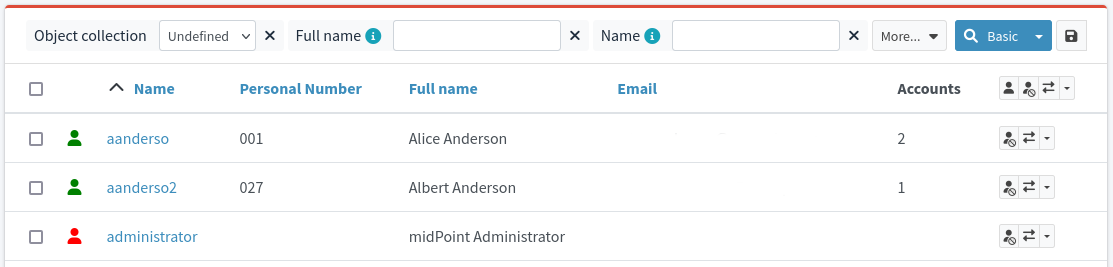

The second chapter describes the midPoint big picture. It shows what midPoint looks like from the outside. It describes what midPoint does and how it behaves. The purpose of this chapter is to familiarize the reader with midPoint workings and basic principles. It describes how midPoint is used.

The third chapter describes basic concepts of midPoint configuration. It guides the reader through midPoint installation. It describes how midPoint is customized, to suit the needs of a particular deployment. However, midPoint customization is a very complex matter, therefore this chapter describes just the basic principles. It will take most of the book to fill in the details.

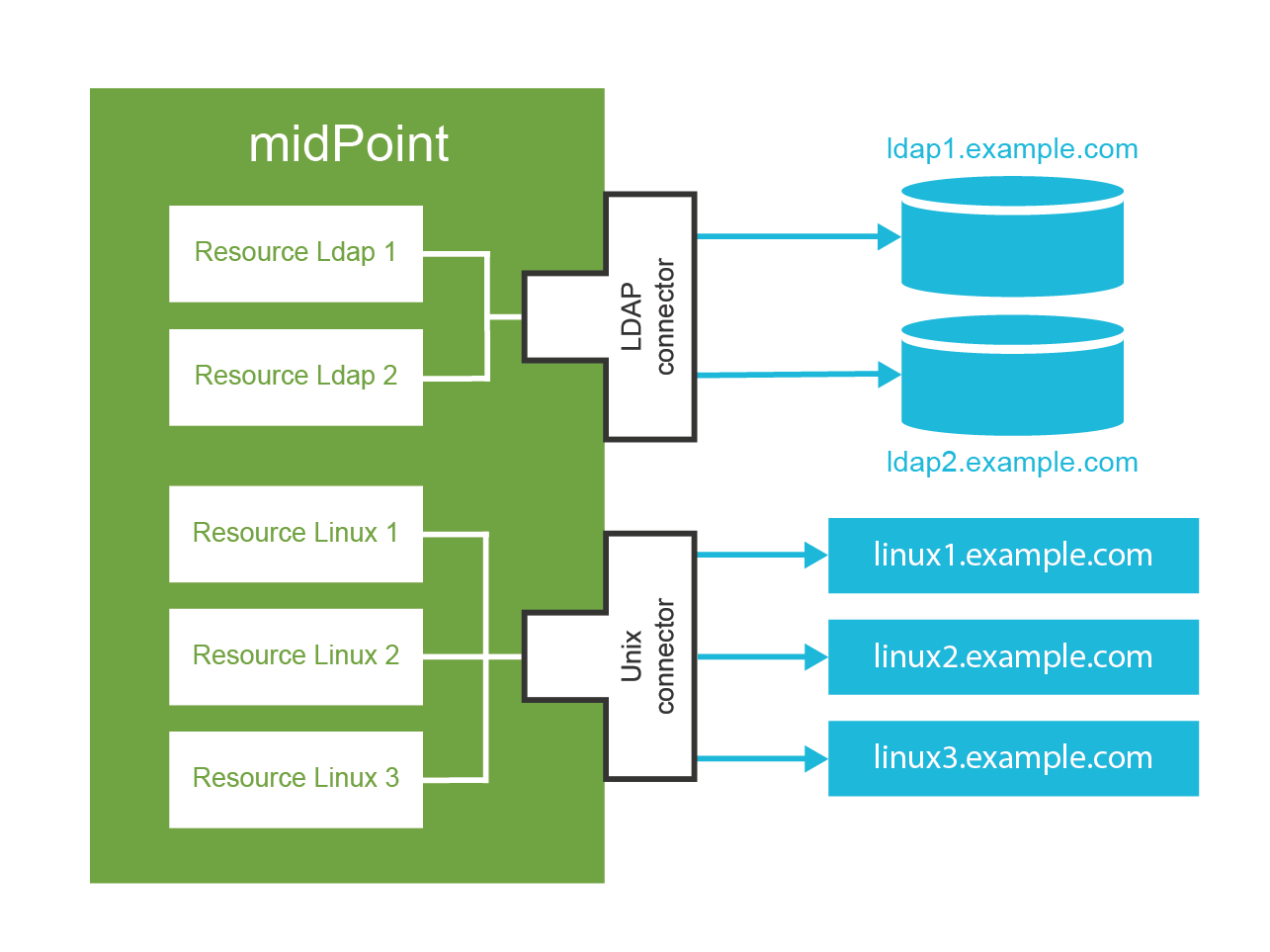

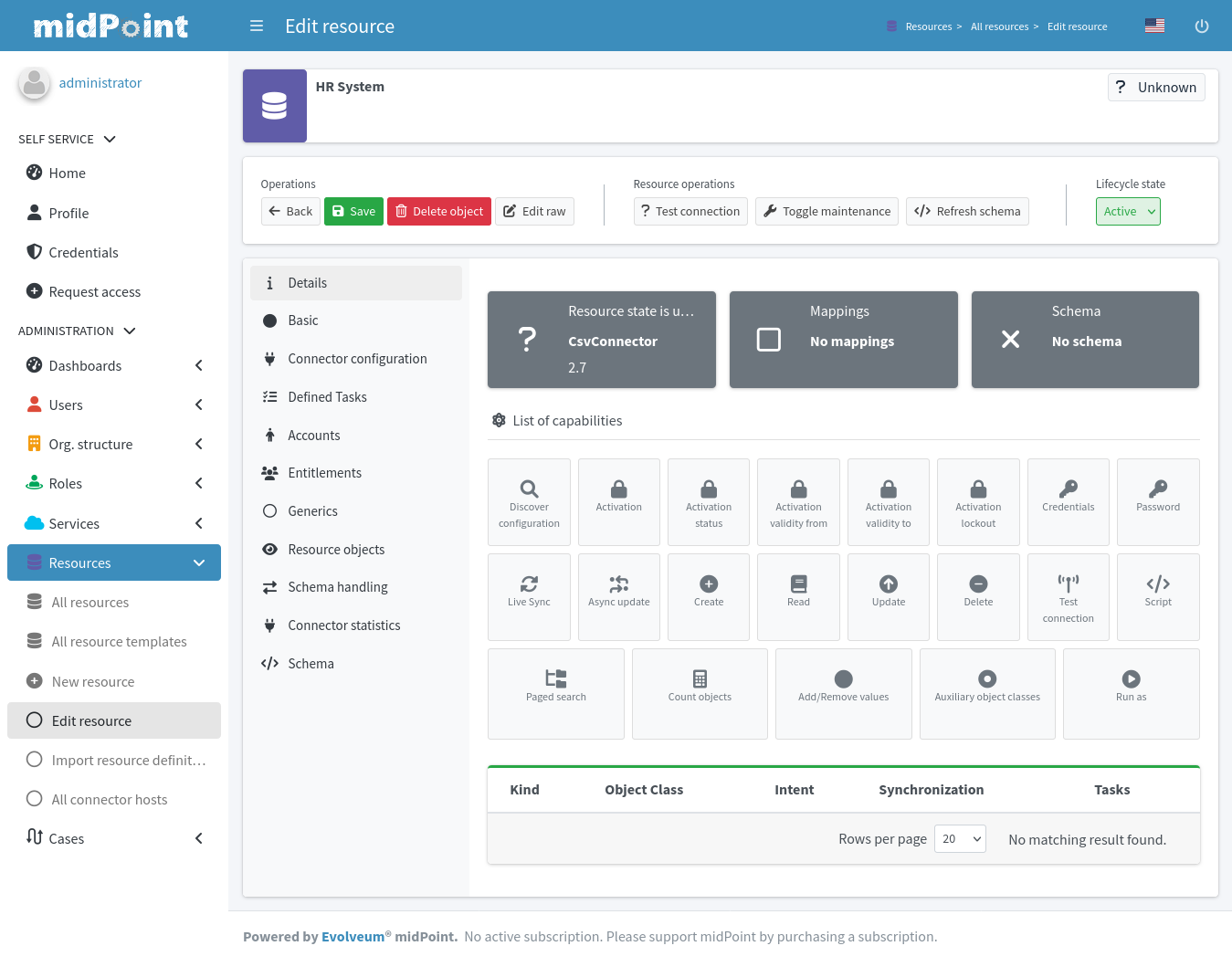

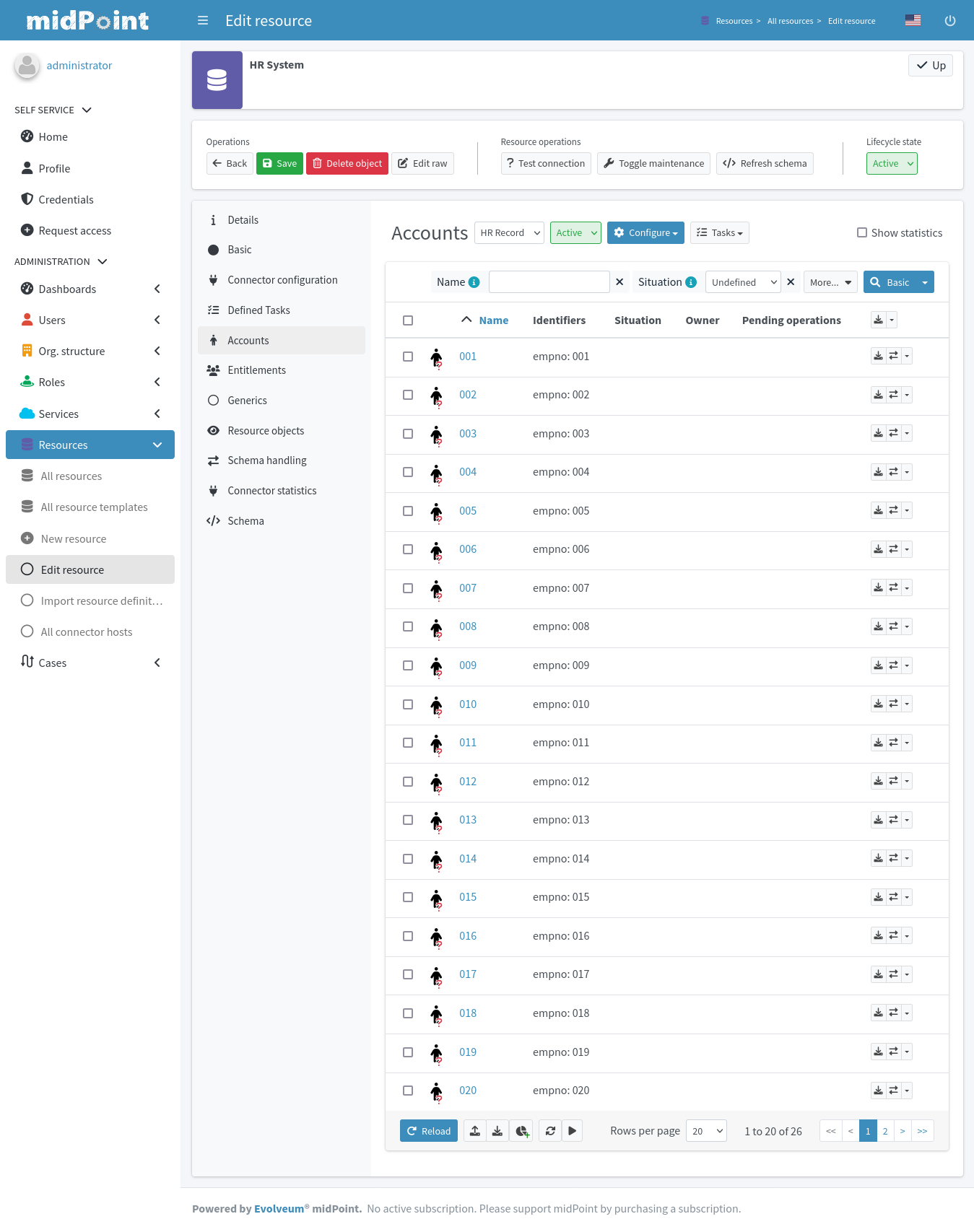

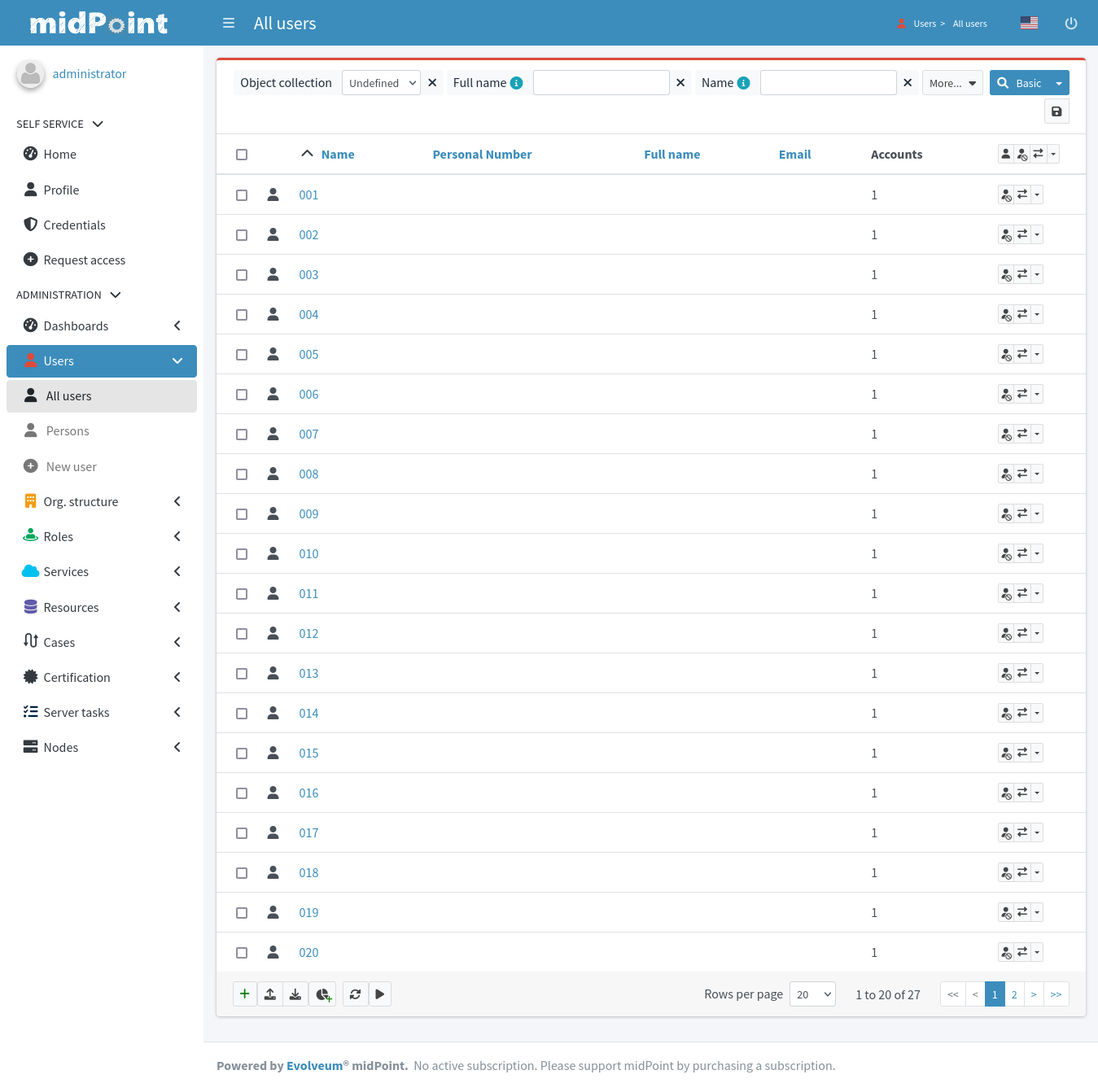

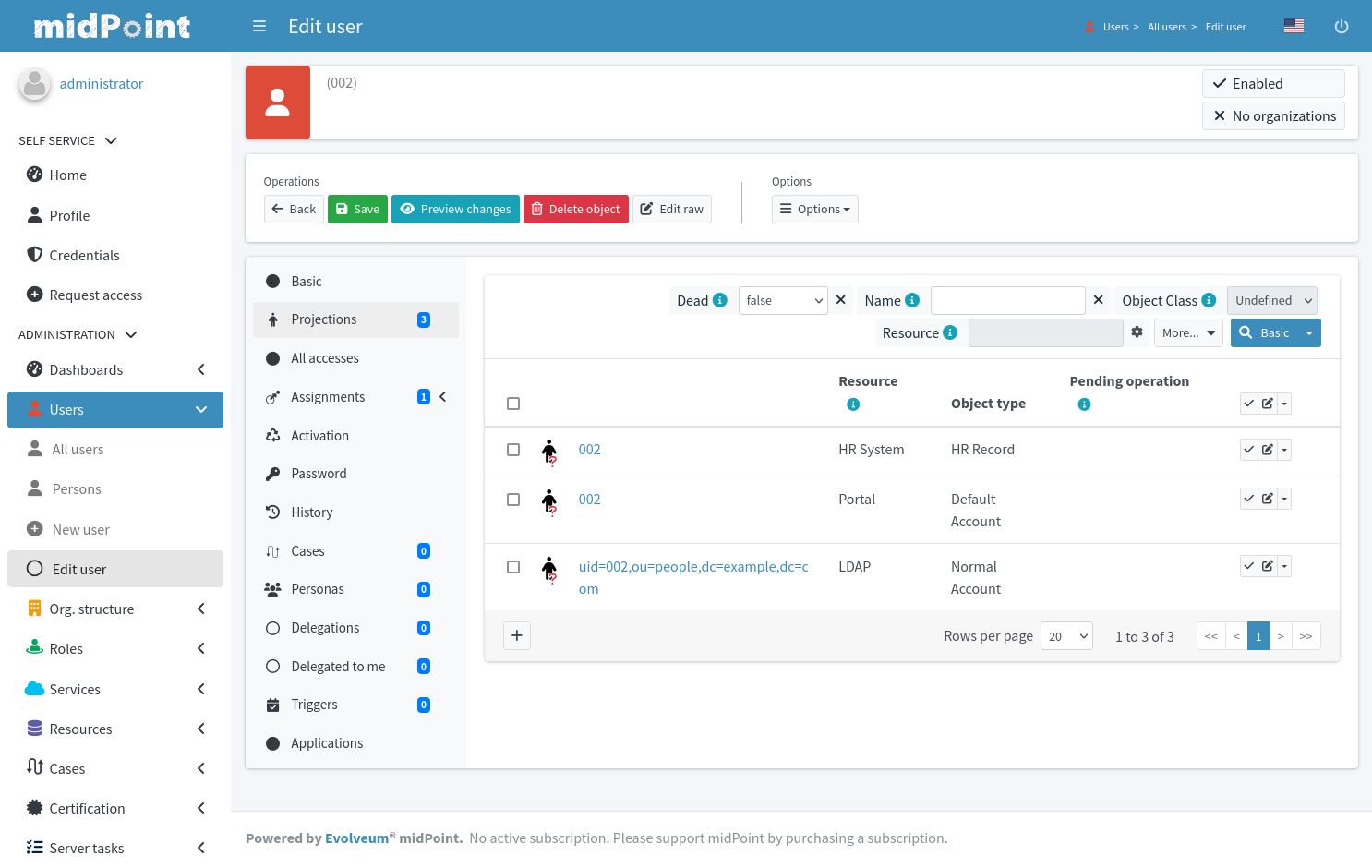

The fourth chapter describes the concepts of identity resource and mappings. This is the bread-and-butter of identity management. This chapter will tell you how to create very basic midPoint deployment, how to connect target systems and how to map and transform the data.

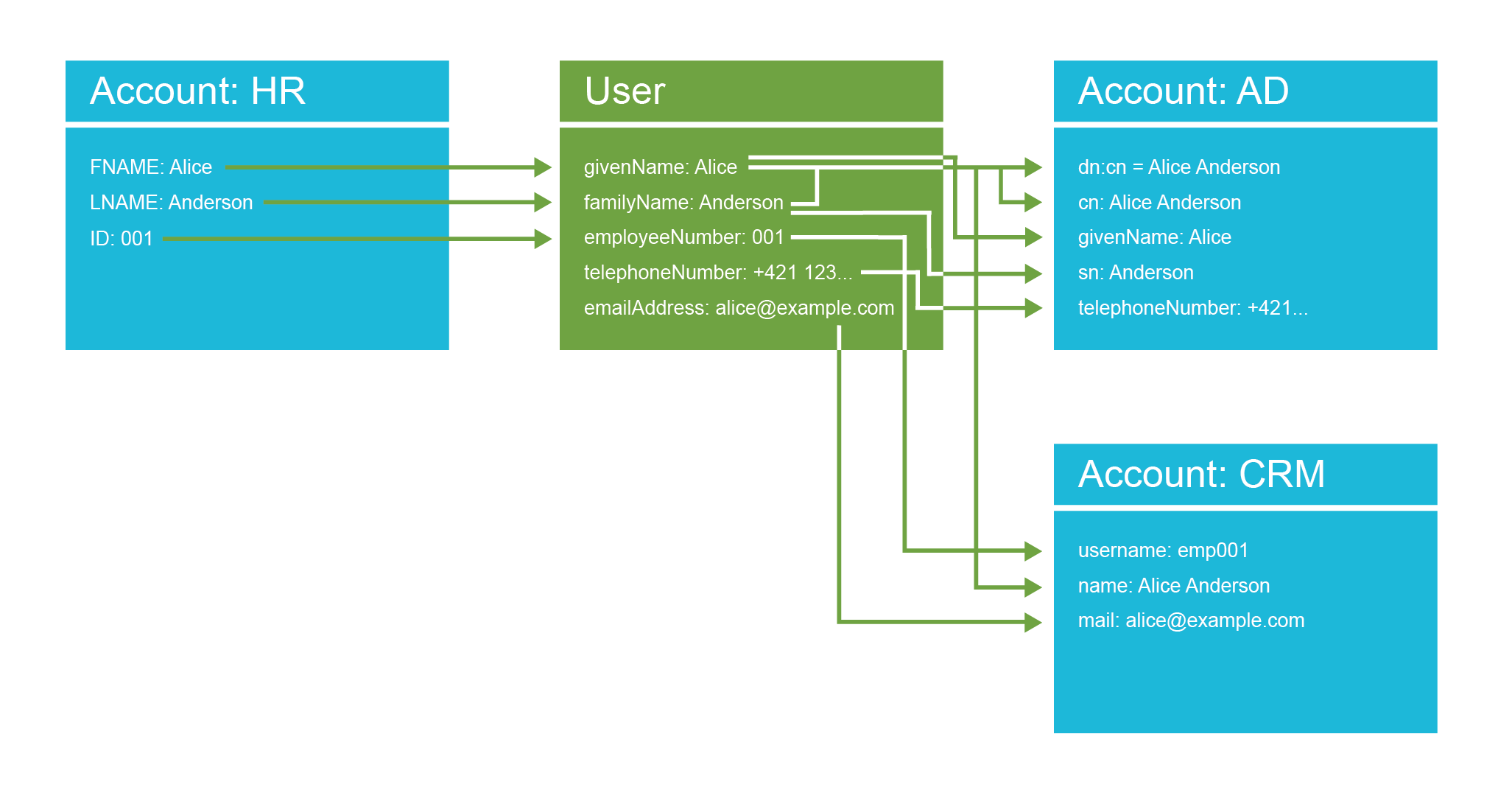

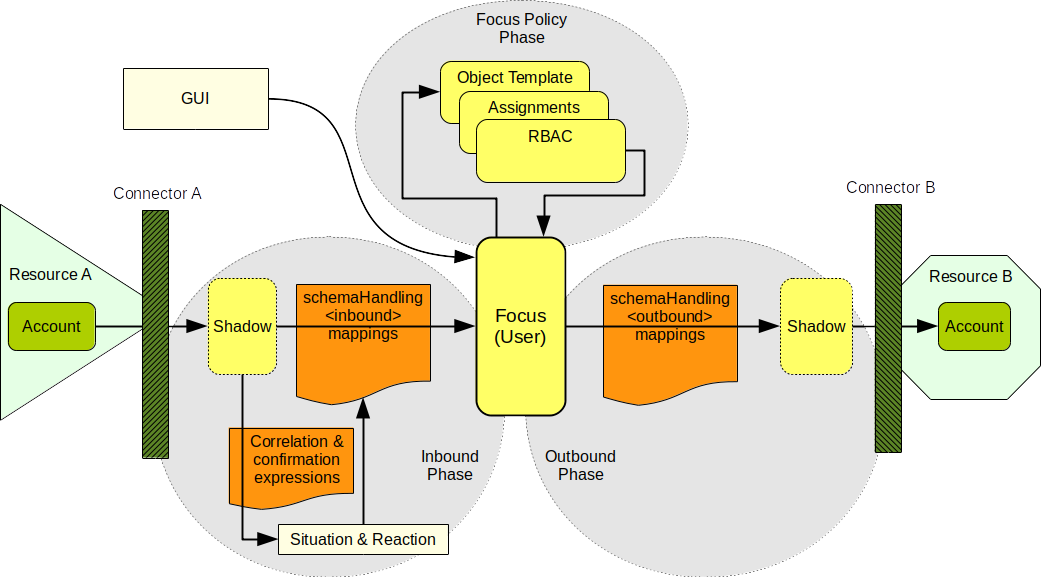

The fifth chapter is all about synchronization. Primary purpose of synchronization is to get the data from the source systems (such as HR system) to midPoint. However, midPoint synchronization is much more powerful than that, as you will see. This chapter also expands the explanation of underlying midPoint principles such as mappings and deltas.

The sixth chapter talks about midPoint schema. MidPoint has a built-in identity data model. Even though this data model is quite rich, it is usually not sufficient to cover all the real-world use cases. Therefore, the data model is designed to be extensible. This chapter describes the methods by which new data items can be defined in midPoint schema.

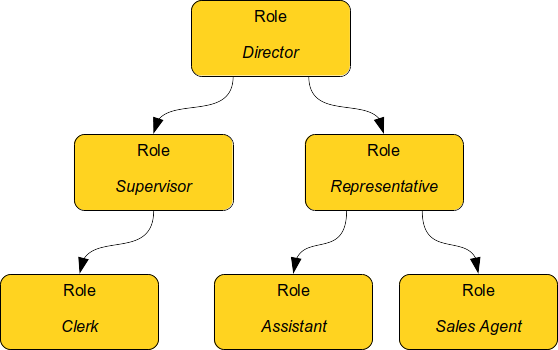

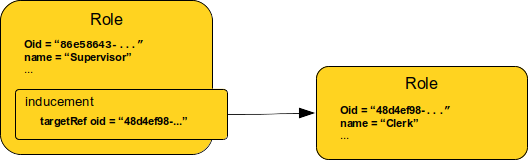

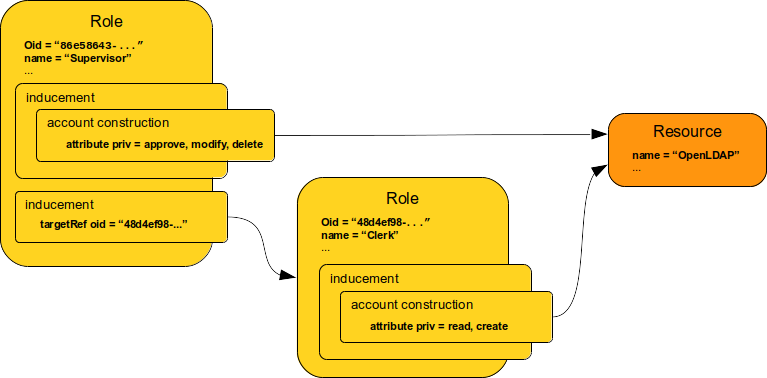

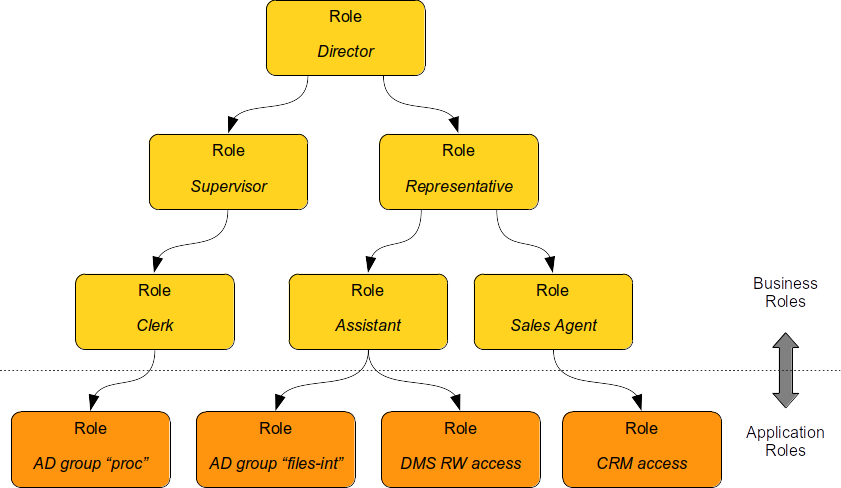

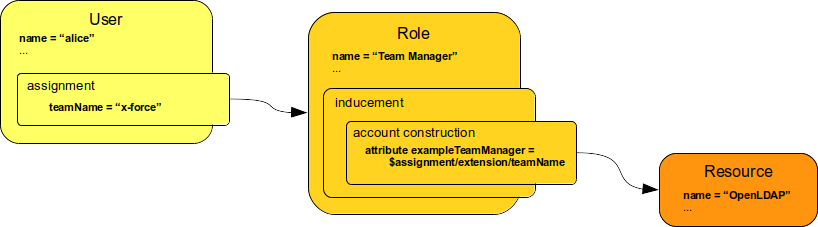

The seventh chapter is all about role-based access control (RBAC). MidPoint role-based model is a very powerful tool to set up complex structures describing job roles, responsibilities, privileges and so on. The role model, and especially the concept of assignment, are generic mechanisms that are used in almost every part of midPoint. Organizational structure management and many identity governance features are built on the foundations described in this chapter.

The eighth chapter is an introduction to object templates. Those templates form a basis of an internal data consistency in midPoint. They can be used to set up simple policies and automation rules. Object templates are a basic workhorse that is used in almost all midPoint deployments.

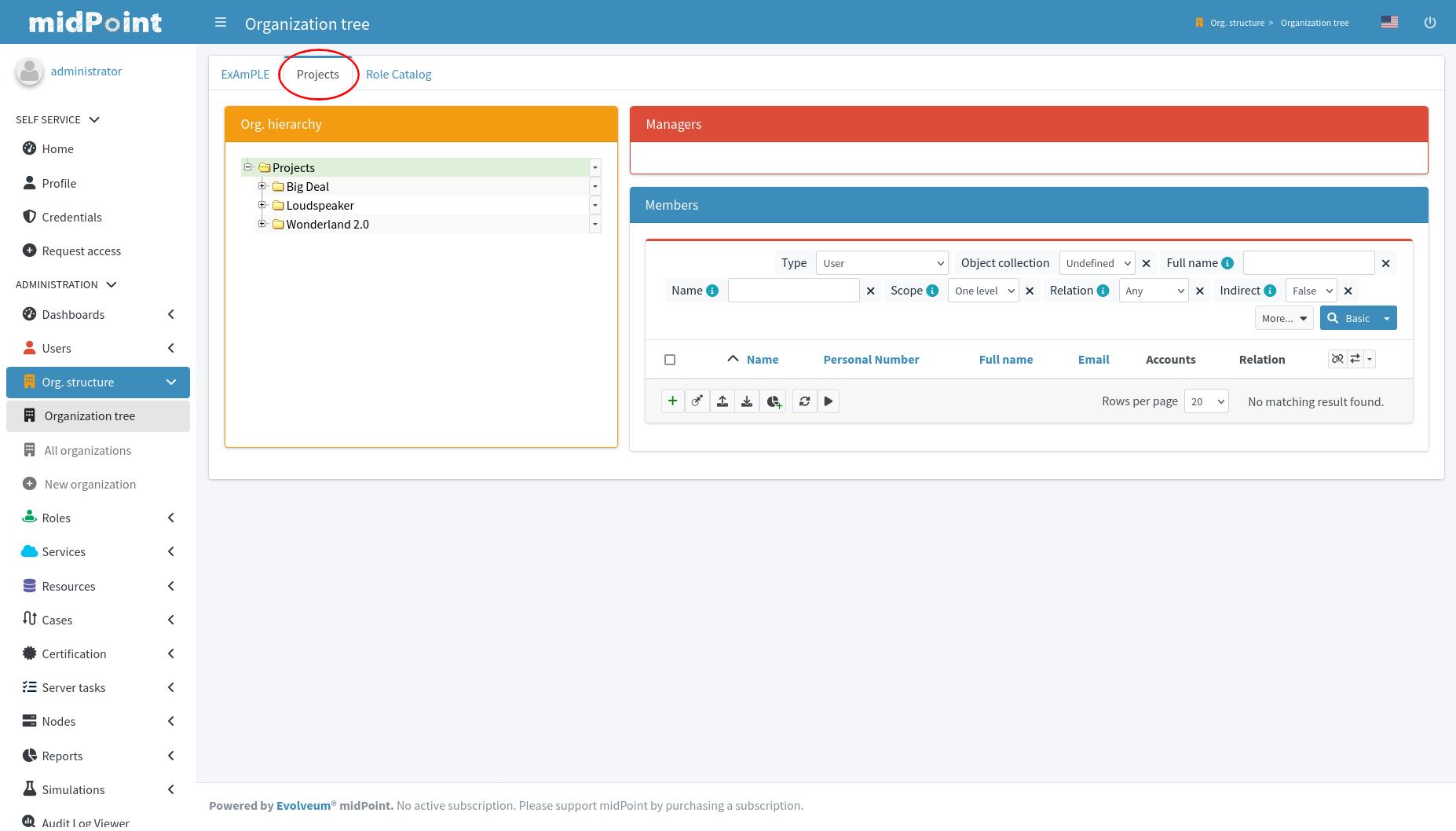

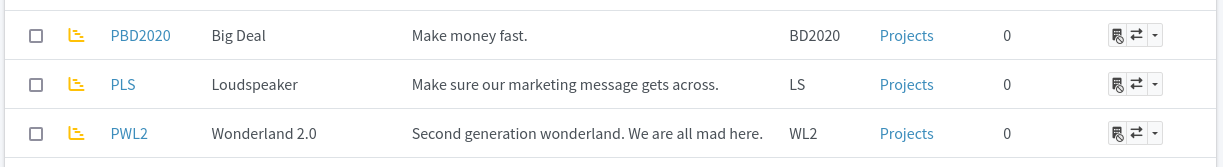

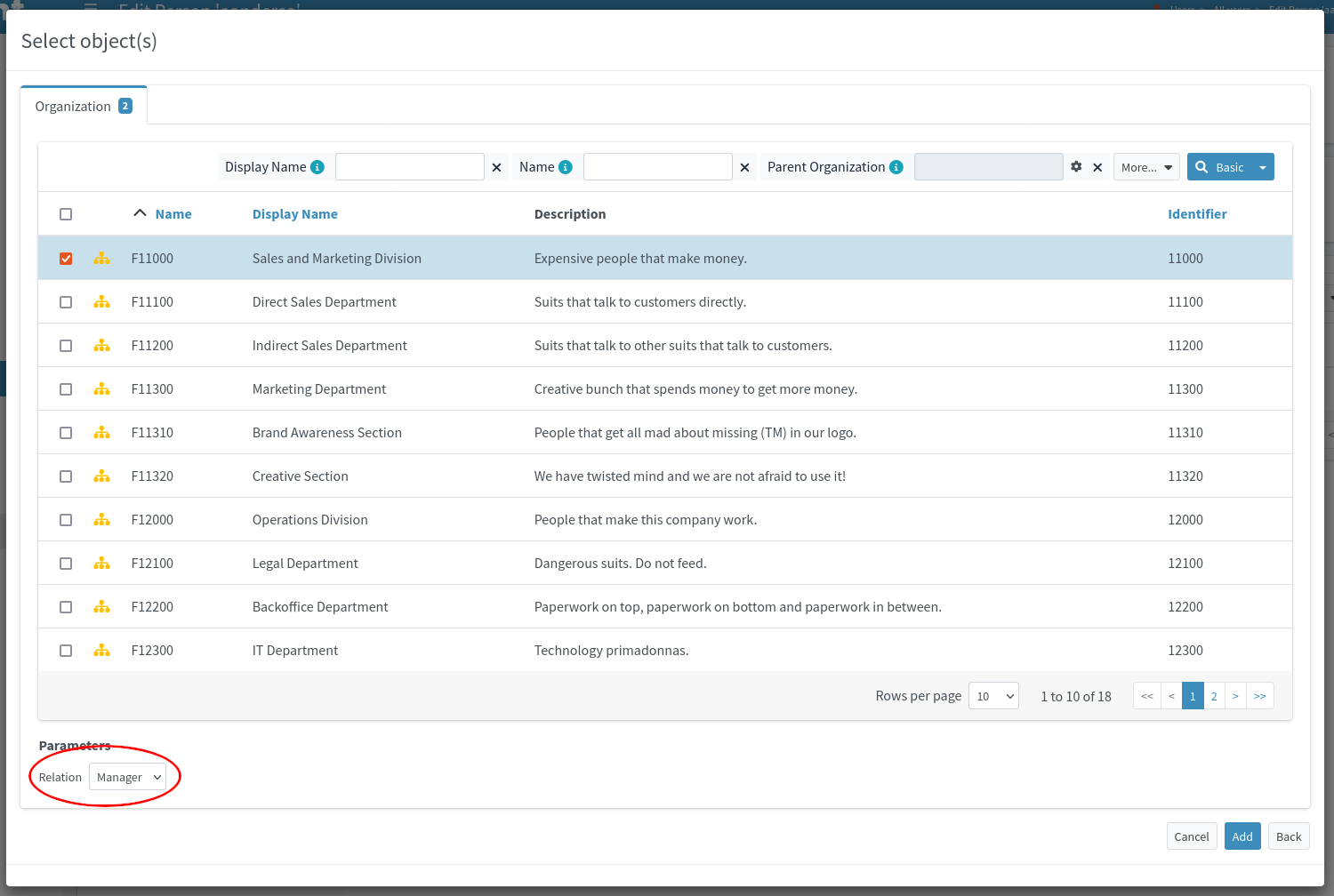

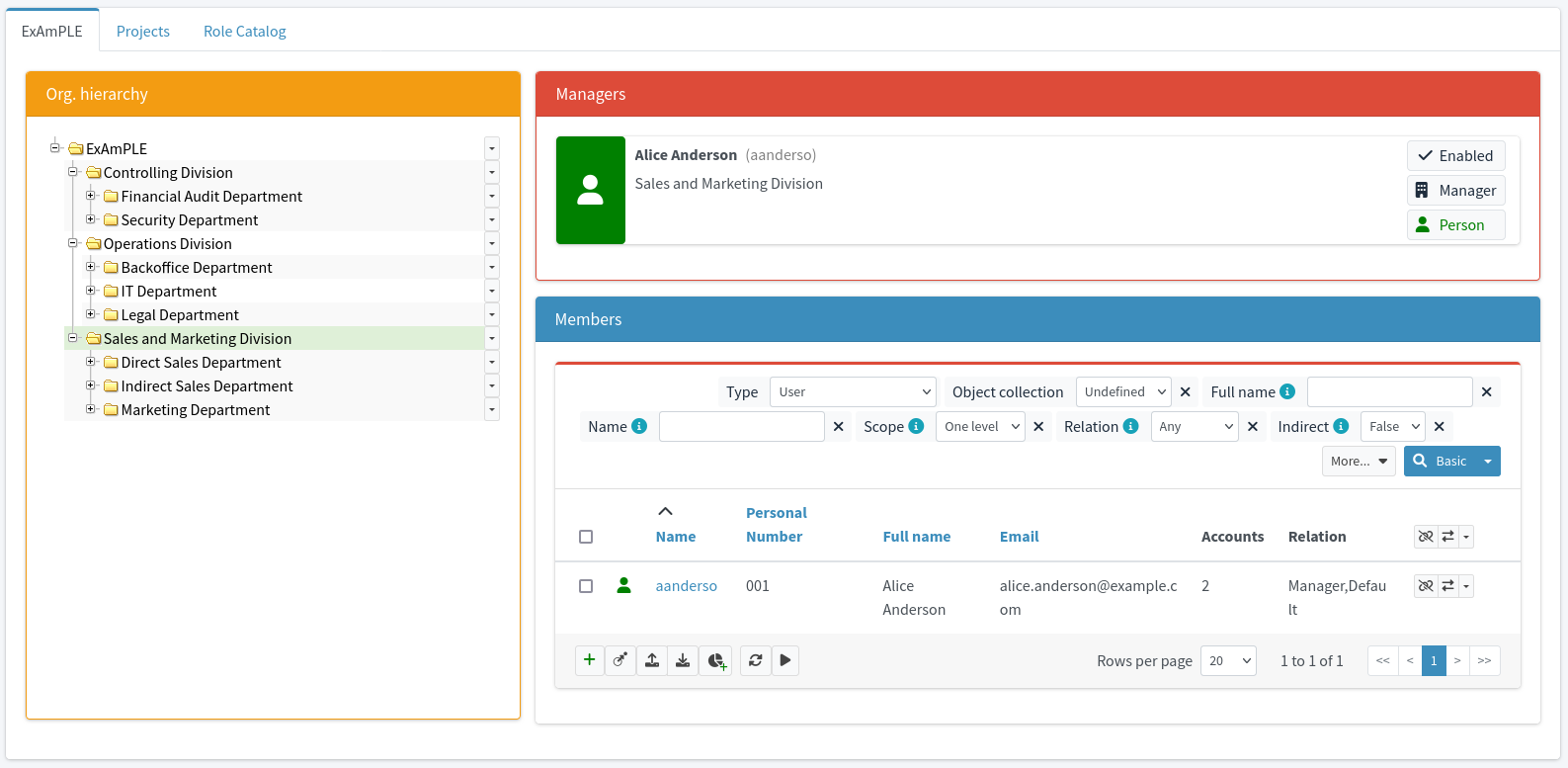

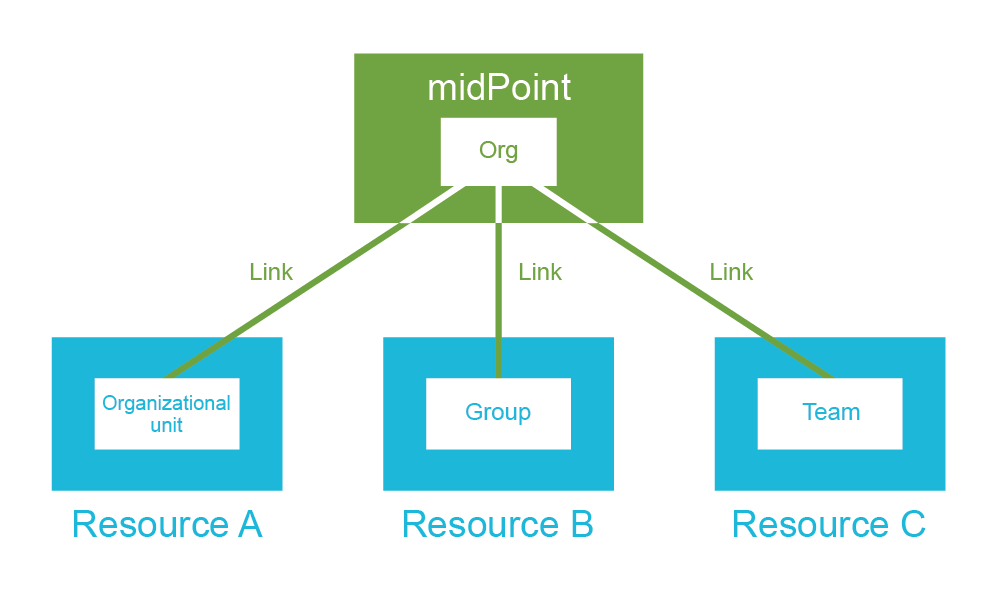

The ninth chapter describes organizational structures. MidPoint organizational structure mechanisms are generic and very powerful. They can be used to model traditional organizational hierarchies, arbitrary trees, and even structures that are not exactly trees. The same mechanism can be used to set up projects, teams, workgroups, classes or almost any conceivable grouping concept. This chapter describes how organizational structures are synchronized with the outer world. The concept of generic synchronization can be applied to synchronize midPoint objects with almost any external data structure.

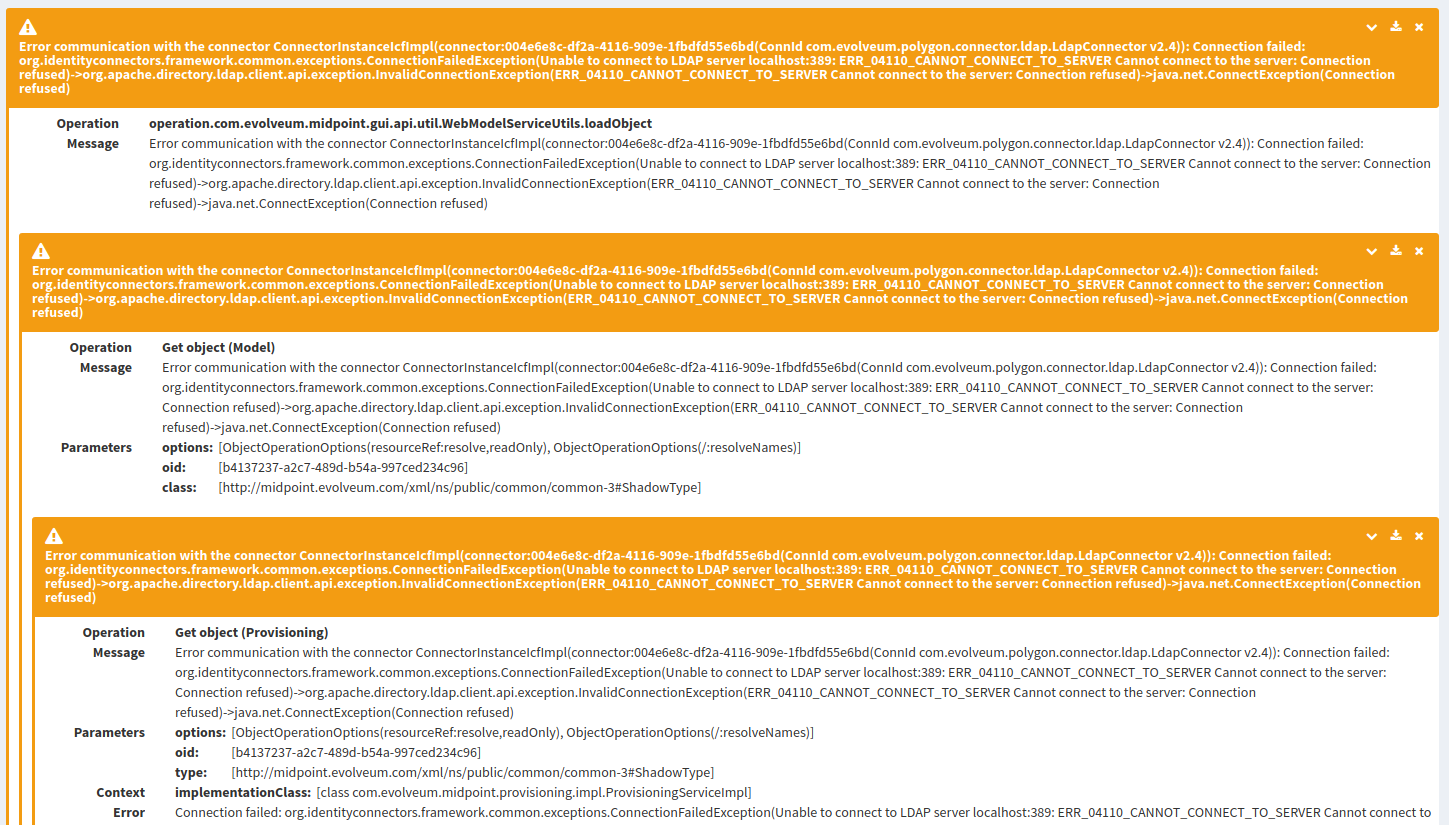

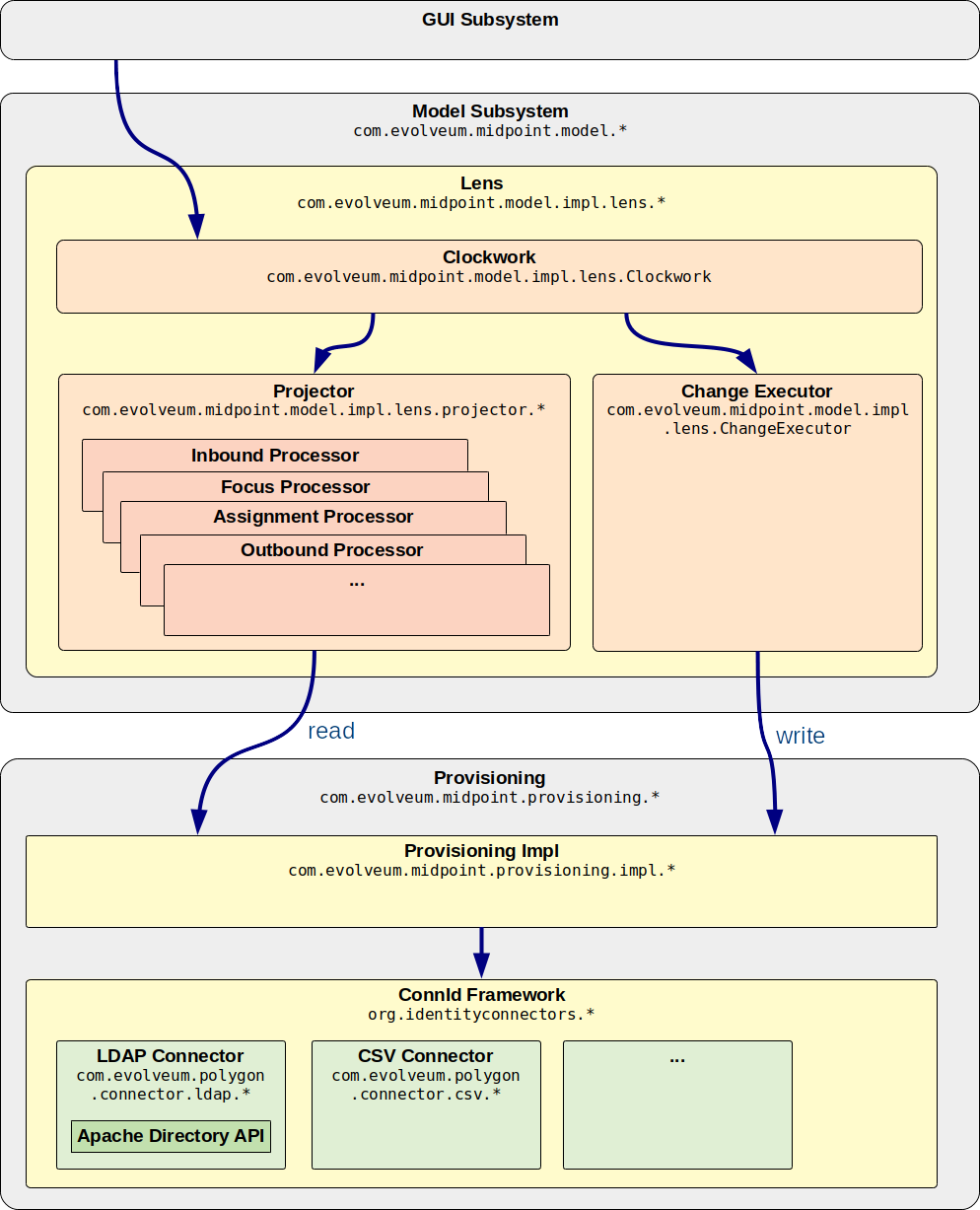

The tenth chapter is about troubleshooting. To err is human. Given all the flexibility of midPoint mechanisms, configuration mistakes just happen, and it may not be easy to figure out the root cause of problems. Therefore, this chapter provides an overview of midPoint diagnostic facilities and recommendations for their use.

The eleventh chapter provides an overview of the midPoint development process and overall approach. It also explains how midPoint development is funded and how midPoint subscriptions work.

The twelfth chapter is a collection of pointers to additional information. This includes a pointer to sample files that accompany this book.

Finally, there is a glossary, explaining all the strange and confusing terms used in identity management and governance field.

The other chapters are not written yet. The description of policies, entitlements, authorizations, archetypes, deployment practices and all the other advanced topics is missing. This book is not finished yet. Just like midPoint itself, this book is written in an incremental and iterative way. Writing a good book is a huge task in itself, and it takes a lot of time. We cannot dedicate that much time to writing the book in one huge chunk. Obviously, a book like this is needed for the midPoint community. Therefore, we have decided not to wait until the book is complete. We will be continuously publishing those chapters that are reasonably well finished. It is better to have something than to have nothing, isn’t it? Please be patient. The whole book will be finished eventually. As always, your support, contributions and sponsoring may considerably speed things up here.

Even though the first version of this book was published in 2015, we are regularly updating the book to reflect new midPoint versions as well as changes in IT environment and trends. Similarly to midPoint itself, the book has to be kept up-to-date to deliver value. The book is usually reviewed at the time new long-term-support (LTS) version of midPoint is released.

We would like to thank all the midPoint developers, contributors and supporters. There were a lot of people involved in midPoint during all these years. All these people pushed midPoint forward. Most of all, we would like to thank the people that were there when the midPoint project was young, people that set midPoint on its path. We would like to thank Katka Stanovská, Katka Bolemant (née Valaliková), Igor Farinič, Ivan Noris, Vilo Repáň, Pavol Mederly and Radovan Semančík.

Anything that is stated in this book is the opinion of the authors. We have tried really hard to remain objective. However, as hard as we might try, some points of view are difficult to change. We work for Evolveum – a company that is also an independent software vendor. Therefore, our opinions may be slightly biased. We have honestly tried to avoid any biases and follow proper engineering practices. You are the judge and the jury in this matter. You, the reader, will decide whether we have succeeded or not. You have free access to all the necessary information to do that: this book is freely available, as is all the midPoint documentation and the source code. We are not hiding anything. Unlike many other vendors, we do not want or need to hide any aspect of the software we are producing.

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (CC BY-NC-ND). This essentially means that you can freely use this book for personal use. You can retrieve and distribute it at no cost. You can use this book freely to learn about midPoint. However, you are not allowed to sell it, modify it or use any parts of this book in commercial projects. You can point to this book by URL, but you are not allowed to pass this book to the customer as a part of product documentation in a commercial project. You are not allowed to use this book as material during commercial training. You are not allowed to use the book in any way that generates profit. If you need to use this book in such a way, please contact Evolveum, and you can obtain a special license to do this. The license fees collected in this way will be used to improve midPoint and especially midPoint documentation. There is no direct profit that we make from this book. The primary reason for writing this book is spreading knowledge about midPoint. However, even open source projects such as midPoint need funding. If you use midPoint in a commercial project that is a source of profit, we think it is only fair if you share part of that profit with midPoint authors. You know as well as we do that this is needed.

The following people have worked on the words and images that make up this book:

- Radovan Semančík (author and maintainer)

- Veronika Kolpaščiková (illustrations, corrections)

- Richard Richter (corrections, suggestions)

Yet there are many more people whose work was needed to make this work happen: midPoint developers, contributors, analysts and deployment engineers, specialists and generalists, theoretical scientists and practical engineers, technical staff and business people, people of Evolveum and the people that work for our partners, our families, friends and all the engineers and scientists for generations and generations past. We indeed stand on the shoulders of giants.

1. Understanding Identity and Access Management

The beginning of knowledge is the discovery of something we do not understand.

What is identity and access management? The answer to that question is both easy and complex. The easy part is: identity and access management (IAM) is a set of information technologies that deal with identities in cyberspace. The complex part of the answer takes the rest of this book.

The story of identity and access management starts with information security. The security requirements dictate the need for authentication and authorization of the users. Authentication is a mechanism by which the computer checks that the user is really the one they pretend to be. Authorization is a related mechanism by which the computer determines whether to allow or deny the user a specific action. Almost every computer system has some means of authentication and authorization.

Perhaps the most widespread form of authentication is a password-based "log in" procedure. The user presents an identifier and a password. The computer checks whether the password is valid. For this procedure to work the computer needs access to the database of all valid users and passwords. Early stand-alone information systems had their own databases that were isolated from the rest of the cyberspace. The data in the database were maintained manually. However, the advent of computer networking changed everything. Users were able to access many systems, and the systems themselves were connected to each other. Maintaining an isolated user database in each system did not make much sense any longer. That’s where the real story of digital identity begins.
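The log-in procedure described above can be illustrated with a minimal sketch. It assumes a hypothetical in-memory user database (`USER_DB`) and stores salted password hashes rather than plain passwords; the choice of PBKDF2 and the function names are assumptions made for illustration only, not something any particular system prescribes.

```python
import hashlib
import hmac
import os

# Hypothetical isolated user database: identifier -> (salt, password hash).
USER_DB = {}


def _hash_password(password, salt):
    # PBKDF2 is an illustrative choice of a deliberately slow hash function.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def register(identifier, password):
    """Create a record in the user database (the 'manual maintenance' step)."""
    salt = os.urandom(16)
    USER_DB[identifier] = (salt, _hash_password(password, salt))


def log_in(identifier, password):
    """Return True only if the identifier exists and the password matches."""
    record = USER_DB.get(identifier)
    if record is None:
        return False
    salt, stored_hash = record
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(stored_hash, _hash_password(password, salt))
```

Every stand-alone system that authenticates this way needs its own copy of `USER_DB`, which is exactly the duplication that the rest of this chapter deals with.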

Enterprise Identity and Access Management

This book deals mostly with Enterprise Identity and Access Management. That is identity and access management applied to larger organizations such as enterprises, financial institutions, government agencies, universities, health care organizations, etc. The focus is on managing employees, contractors, customers, partners, students and other people that cooperate with the organization. However, many of the mechanisms and principles described in this book can be applied to non-enterprise environments.

Directory Services and Other User Databases

The central concept of identity management is a data record which contains information about a person. This concept has many names: user profile, persona, user record, digital identity and many more. The most common name in the context of identity management is user account. Accounts usually hold information that describes the real-world person using a set of attributes such as given name and family name. However, probably the most important part of the account is the technical information that relates to the operation of an information system for which the account is created. This includes operational parameters such as location of user’s home directory, wide variety of permission information such as group and role membership, system resource limits and so on. User accounts are represented in a wide variety of forms, ranging from relational database records through structured data files to semi-structured text files. Regardless of the specific method used to store and process the records, the account is undoubtedly one of the most important concepts of IAM field - and so are the databases where the accounts are stored.
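As a rough illustration, an account can be pictured as a record that mixes descriptive attributes with system-specific technical data. The following sketch uses a hypothetical `Account` class; the field names are assumptions chosen to mirror the examples in the paragraph above, not the schema of any real system.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Account:
    # Attributes describing the real-world person.
    username: str
    given_name: str
    family_name: str
    # Technical information tied to one particular information system.
    home_directory: str
    groups: Set[str] = field(default_factory=set)   # group and role membership
    disk_quota_mb: Optional[int] = None              # a system resource limit
```

The same person typically has one such record per system, each with a different set of technical fields.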

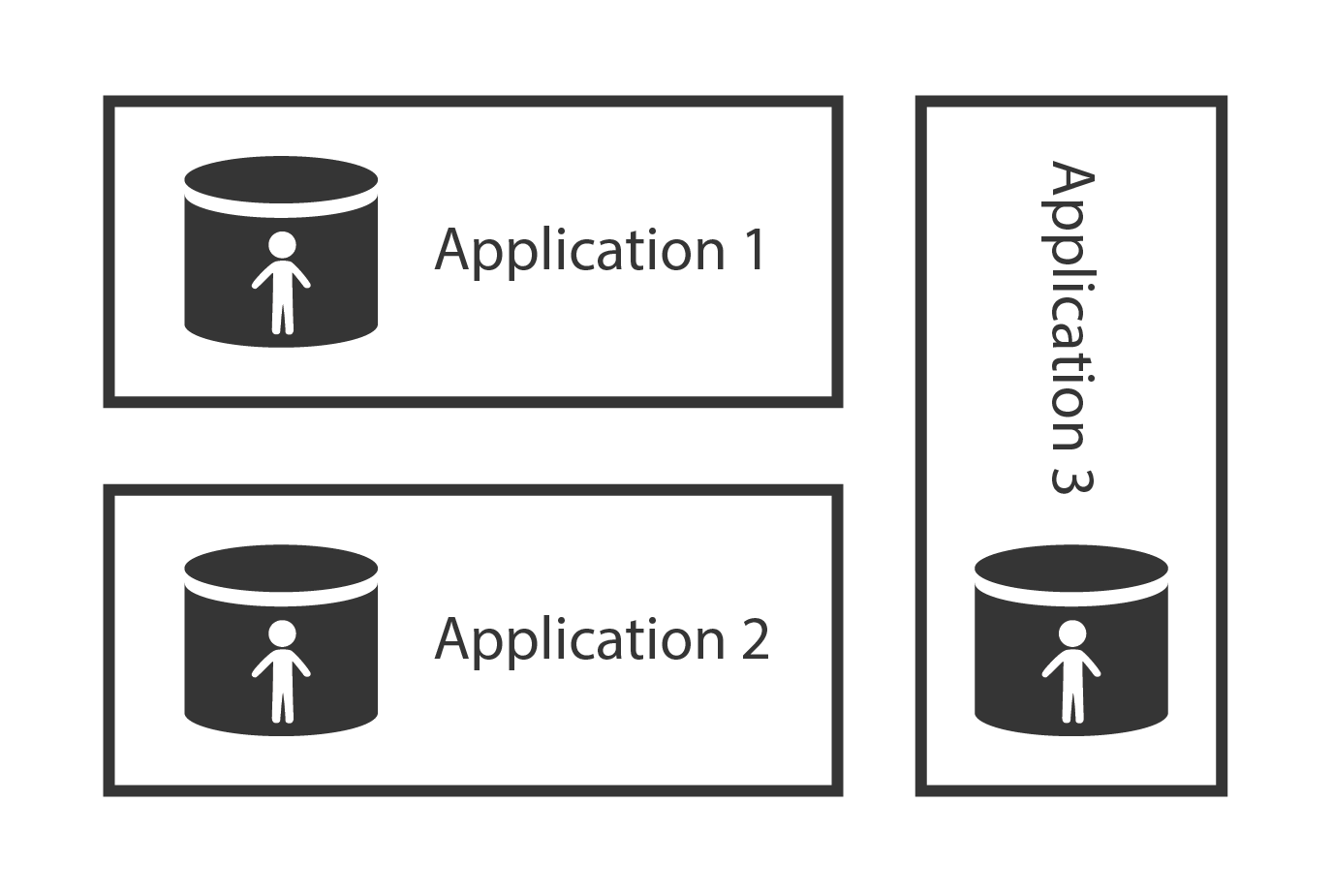

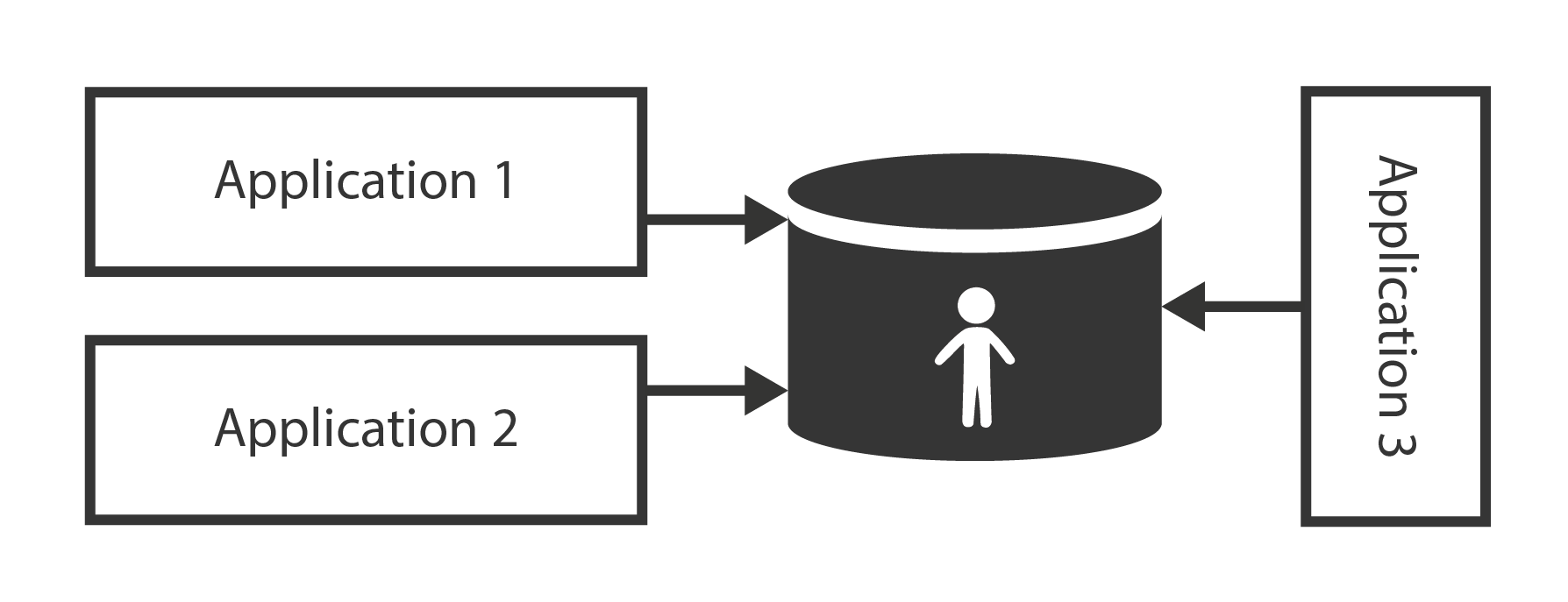

The account databases are as varied as the account types. Most account databases in the past were implemented as an integral part of the monolithic information system using the same database technology as the system used. This is an obvious choice, and it remains quite popular even today. Therefore, many accounts are stored in relational database tables and similar application data stores.

Application data stores are usually tightly bound to the application. Therefore, accounts stored in such databases are difficult to share with other applications. However, sharing account data across the organization is more than desirable. It makes very little sense to maintain account data in each database separately – especially if most of the accounts are the same in each application. Therefore, there is a strong motivation to deploy account databases that can be shared by many applications.

Directory servers are built with the primary purpose to provide shared data storage to applications. While application databases usually use their own proprietary communication protocol, directory servers implement standardized protocols. While databases are built for application-specific data model, directory servers usually extend standardized data model, which improves interoperability. While databases may be heavyweight and expensive to scale, directory servers are designed to be lightweight and provide massive scalability. That makes directory servers almost ideal candidates for a shared account database.

A shared identity store makes user management easier. An account needs to be created and managed in one place only. Authentication still happens in each application separately. Yet, as the applications use the same credentials from the shared store, the user may use the same password for all the connected applications. This is an improvement over setting the password for each application separately.

Identity management solutions based on shared directory servers are simple and quite cost-efficient. Therefore, we have been giving the same advice for many years: if you can connect all your applications to an LDAP directory server, do not think too much about it and just do it. The problem is that this usually works only for very simple systems.

Lightweight Directory Access Protocol (LDAP)

Lightweight Directory Access Protocol (LDAP) is a standard protocol for accessing directory services. It is an old protocol, when judged by Internet age standards. LDAP roots go as far back as the 1980s to a family of telecommunication protocols known as X.500. Even though LDAP may be old, it is widely used. It is a very efficient binary protocol that was designed to support massively distributed shared databases. It has a small set of well-defined simple operations. The operations and the data meta-model implied by the protocol allow very efficient data replication and horizontal scalability of directory servers. This simplicity contributes to low latencies and high throughput for read operations. The horizontal scalability and relative autonomy of directory server instances is supposed to increase the availability of the directory system. These benefits often come at the expense of slow write operations. As identity data are often read but seldom modified, slower writes are usually a perfectly acceptable trade-off. Therefore, LDAP-based directory servers were, and in many places still remain, the most popular databases for identity data.

LDAP is one of the precious few established standards in the IAM field. However, it is far from being perfect. LDAP was created in the 1990s, with roots going back to the 1980s. There are some problems in the original LDAP design that were never fully addressed. Also, the LDAP schema has a distinctive feel of the 80s and 90s. LDAP would deserve a major review, to correct the problems and bring the protocol to the 21st century. Sadly, there hasn’t been a major update to LDAP specifications for decades.

Even though LDAP has its problems, it still remains a useful tool. Most LDAP server vendors provide proprietary solutions to LDAP problems. Many organizations store identities in LDAP-enabled data stores. There are many applications that support LDAP, mostly for centralization of password-based authentication. LDAP still remains a major protocol in Identity and Access Management field. Therefore, we will be getting back to the LDAP protocol many times in this book.

Directory Servers are Databases

Directory servers are just databases that store information. Nothing more. The protocols and interfaces (APIs) used to access directory servers are designed as database interfaces. They are good for storing, searching and retrieving data. While the user account data often contain entitlement information (permissions, groups, roles, etc.), directory servers are not well-suited to evaluate them. That is, a directory server can provide information about what permissions an account has, but it is not designed to make a decision whether to allow or deny a specific operation. Also, directory servers do not contain data about user sessions. Directory servers do not know whether the user is currently logged in or not. Many directory servers are used for basic authentication and even authorization. Yet, the directory servers were not designed to do this. Directory servers provide only the very basic capabilities. There are plug-ins and extensions that provide partial capabilities to support authentication and authorization. However, that does not change the fundamental design principles. Directory servers are databases, not authentication or authorization servers. Being databases, they should be used as such.

However, many applications use directory servers to centralize password authentication. In fact, this is a reasonably good and cost-efficient way to centralize password-based authentication, especially if you are just starting with identity and access management. Nevertheless, you should be aware that this is a temporary solution. It has many limitations. The right way to do it is to use an authentication server instead of a directory server. Access Management (AM) technologies can provide that.
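The split of responsibilities can be sketched as follows. The directory is modelled as a plain dictionary (`DIRECTORY`), and the entry name, attribute names and group names are all hypothetical. The point of the sketch is only that the read operation returns stored data, while the allow/deny decision is made by the application.

```python
# Hypothetical directory content: entry name -> stored attributes.
DIRECTORY = {
    "uid=jsmith,ou=people,dc=example,dc=com": {
        "cn": "John Smith",
        "groups": {"sales", "crm-users"},
    },
}


def directory_lookup(dn):
    """Database-style read: return the stored entry, make no decision."""
    return DIRECTORY.get(dn)


def application_allows(dn, required_group):
    """The allow/deny decision lives in the application, not in the directory."""
    entry = directory_lookup(dn)
    return entry is not None and required_group in entry["groups"]
```

Note that nothing in `directory_lookup` knows about operations, sessions or policies; it only stores and retrieves.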

Single Directory Server Myth

Now listen, this is a nice and simple idea: Let’s keep all our user data in a single directory server. All our applications can access the data there, all the applications will see the same data. We even have this "LDAP" thing, standardized protocol to access the database. Here, all identity management problems are solved!

Unfortunately, they are not. Shared directory server makes user management easier. However, this is not a complete solution, and there are serious limitations to this approach. The heterogeneity of information systems makes it nearly impossible to put all required data into a single directory system.

The obvious problem is the lack of a single, coherent source of information. There are usually several sources of information for a single user. For example, a human resources (HR) system is authoritative for the existence of an employee in the enterprise. However, the HR system is usually not authoritative for assignment of an employee identifier such as a username. There needs to be an algorithm that ensures uniqueness of the username across all the current and past employees, contractors and partners. Moreover, there may be additional sources of information. For example, a management information system may be responsible for determination of the user’s roles (e.g. in a project-oriented organizational structure). An inventory management system may be responsible for assigning a telephone number to the user. The groupware system may be an authoritative source of the user’s e-mail address and other electronic contact data. There are usually 2 to 20 systems that provide authoritative information for a single user. Therefore, there is no simple way to feed and maintain the data in the directory system.

Then there are spatial and technological barriers. Many complex applications need a local user database. They must store the copies of user records in their own databases to operate efficiently. For example, large billing systems cannot work efficiently with external data (e.g. because of a need to make a relational database join). Therefore, even if a directory server is deployed, these applications still need to maintain a local copy of identity data. Keeping the copy synchronized with the directory data may seem like a simple task. But it is not. Additionally, there are legacy systems which usually cannot access the external data at all (e.g. they do not support the LDAP protocol).

Some services need to keep even more state than just a simple database record. For example, file servers usually create home directories for users. While the account creation can usually be done in an on-demand fashion (e.g. create the user directory at first user log-on), the modification and deletion of the account is much more difficult. A directory server will not do that.
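The username uniqueness requirement mentioned above can be sketched with a very simple algorithm. This is an assumption-laden illustration, not the algorithm used by any particular product: the naming convention (first initial plus family name, then a numeric suffix) and the `generate_username` function are hypothetical. The important detail is that `taken` must contain every username ever assigned, including those of people who have already left.

```python
def generate_username(given_name, family_name, taken):
    """Return a username not present in `taken` and record it there.

    `taken` is the set of all usernames ever assigned (current and past).
    """
    base = (given_name[:1] + family_name).lower()
    candidate = base
    suffix = 2
    # Append an increasing number until the candidate has never been used.
    while candidate in taken:
        candidate = f"{base}{suffix}"
        suffix += 1
    taken.add(candidate)
    return candidate
```

A real deployment would also have to deal with national characters, length limits and concurrent requests, all of which this sketch ignores.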

Perhaps the most painful problem is the complexity of access control policies. Role names and access control attributes may not have the same meaning in all systems. Different systems usually have different authorization algorithms that are not mutually compatible. While this issue can be solved with per-application access control attributes, the maintenance of these attributes is seldom trivial. If every application has its own set of attributes to control access control policies, then the centralized directory provides only a negligible advantage. The attributes may as well reside in the applications themselves. That’s exactly how most directory deployments end up. Directory servers contain only the groups, groups that usually roughly approximate low-level RBAC roles.

Quite surprisingly, LDAP standards themselves create a significant obstacle to interoperability in this case. There are at least three or four different - and incompatible - specifications for group definition in LDAP directories. Moreover, the usual method to manage LDAP groups is not ideal at all. It is especially problematic when managing big groups. Therefore, many directory servers provide their own non-standard improvements, which further complicates interoperability. Yet even these server-specific improvements usually cannot support complex access control policies. Therefore, access control policies and fine-grained authorizations are usually not centralized in directory servers, they are maintained directly in the application databases.
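To make the group incompatibility concrete, here is a sketch that reads membership from three commonly seen group object classes: `groupOfNames` (attribute `member`, holding DNs), `groupOfUniqueNames` (attribute `uniqueMember`, holding DNs) and `posixGroup` (attribute `memberUid`, holding plain user names). These three are chosen as examples and are not necessarily the exact set the text refers to. Entries are modelled as plain dictionaries, the helper names are hypothetical, and the DN parsing is deliberately naive: it assumes that the first RDN is `uid=<name>` and contains no escaped commas.

```python
def _uid_from_dn(dn):
    # Naive parsing: take the first RDN and expect it to be uid=<name>.
    first_rdn = dn.split(",", 1)[0]
    attr, _, value = first_rdn.partition("=")
    if attr.strip().lower() != "uid":
        raise ValueError(f"cannot derive user name from DN: {dn}")
    return value.strip()


def member_uids(group_entry):
    """Return member user names for a group entry (dict: attribute -> list of values)."""
    object_classes = {oc.lower() for oc in group_entry.get("objectClass", [])}
    if "posixgroup" in object_classes:
        # posixGroup stores plain user names, not DNs.
        return set(group_entry.get("memberUid", []))
    if "groupofnames" in object_classes:
        dns = group_entry.get("member", [])
    elif "groupofuniquenames" in object_classes:
        dns = group_entry.get("uniqueMember", [])
    else:
        raise ValueError("unsupported group object class")
    return {_uid_from_dn(dn) for dn in dns}
```

Every application that reads groups has to contain some variant of this branching, which is one reason why group data are harder to share than they first appear.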

The single directory approach is feasible only in very simple environments or in almost entirely homogeneous environments. In all other cases there is a need to supplement the solution by other identity management technologies.

This does not mean that the directory servers or other shared databases are useless. Quite the contrary. They are very useful if they are used correctly. They just cannot be used alone. More components are needed to build a complete solution.

Access Management

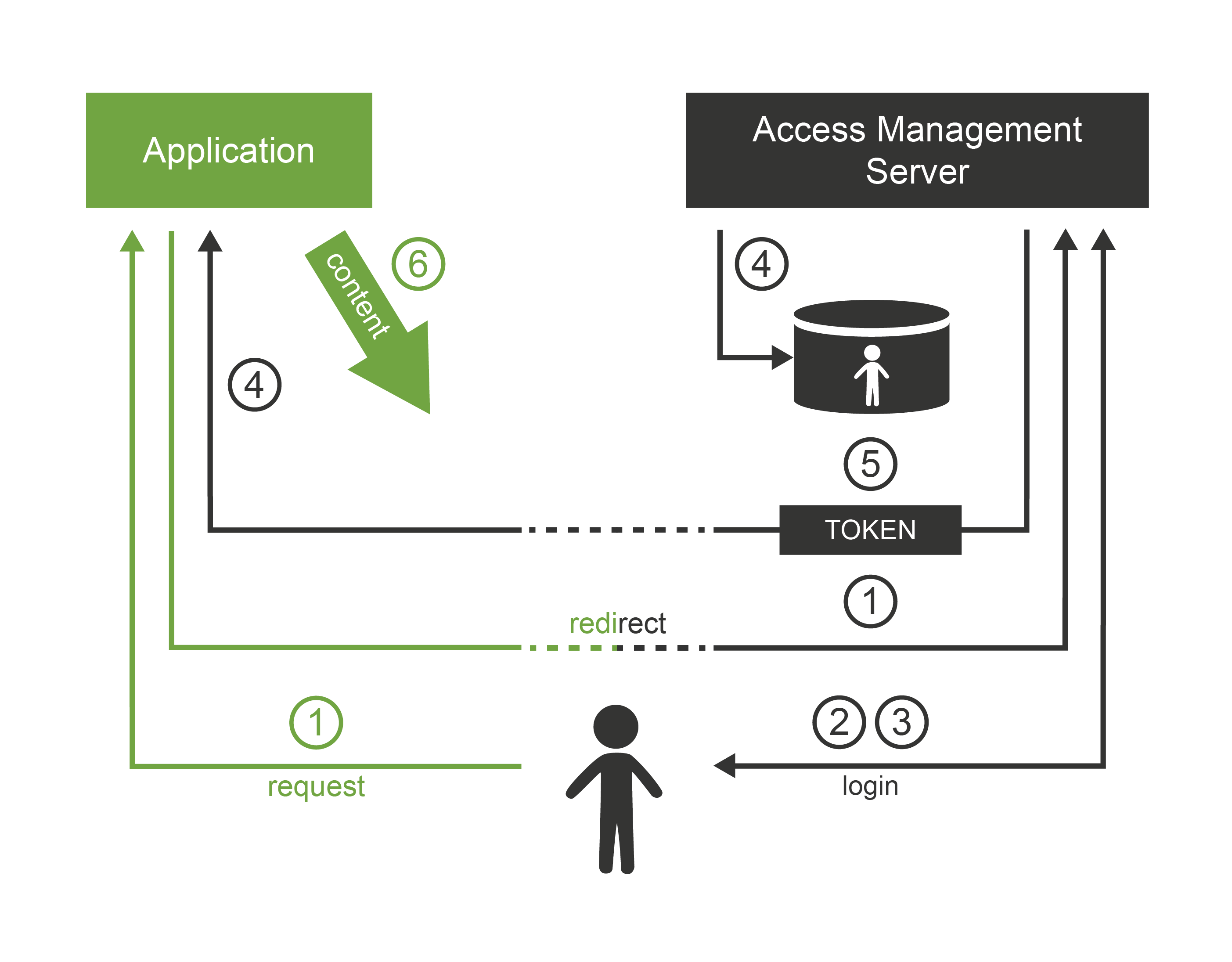

While directory systems are not designed to handle complex authentication, access management (AM) systems are built to handle just that. Access management systems handle all the flavors of authentication, and even some authorization aspects. The principle of all access management systems is basically the same:

- Access management system gets between the user and the target application. This can be done by a variety of mechanisms, the most common method is that the applications themselves redirect the user to the AM system if they do not have existing session.

- Access management system prompts user for the username and password, interacts with user’s mobile device or in any other way initiates the authentication procedure.

- User enters the credentials.

- Access management system checks the validity of credentials and evaluates access policies.

- If access is allowed then the AM system redirects user back to the application. The redirection usually contains an access token: a small piece of information that tells the application that the user is authenticated.

- Application validates the token, creates a local session and allows the access.

After that procedure, the user works with the application normally. Only the first access goes through the AM server. This is important for AM system performance and sizing, and it impacts session management functionality.
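The token hand-over at the end of this procedure can be sketched as follows. This is a simplified illustration, not the format used by any real access management product: it assumes that the AM system and the application share a secret key (`SHARED_KEY`), and that the token is a JSON payload with an HMAC signature. The function names and the token layout are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical secret shared between the AM system and the application.
SHARED_KEY = b"demo-key-shared-by-am-and-application"


def am_issue_token(username, ttl_seconds=300, now=None):
    """AM side: called after successful authentication and policy evaluation."""
    now = time.time() if now is None else now
    payload = json.dumps({"sub": username, "exp": now + ttl_seconds}).encode("utf-8")
    signature = hmac.new(SHARED_KEY, payload, hashlib.sha256).digest()
    return (base64.urlsafe_b64encode(payload).decode("ascii") + "."
            + base64.urlsafe_b64encode(signature).decode("ascii"))


def app_validate_token(token, now=None):
    """Application side: return the username, or None if the token is not acceptable."""
    now = time.time() if now is None else now
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:
        return None  # malformed token
    expected = hmac.new(SHARED_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return None  # token was not issued by the AM system
    claims = json.loads(payload)
    if claims["exp"] < now:
        return None  # token has expired
    return claims["sub"]
```

After `app_validate_token` succeeds, the application would create its local session and stop talking to the AM system, which is why only the first access has to pass through it.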

The applications only need to provide the code that integrates with the AM system. Except for that small integration code, applications do not need to implement any authentication code at all. It is the AM system that prompts for the password, not the application. This is a fundamental difference when compared to LDAP-based authentication mechanisms. In the LDAP case, it is the application that prompts for the password. In the AM case, the Access Management server does everything. Many applications do not even care how the user was authenticated. All they need to know is that he was authenticated and that the authentication was strong enough. This feature brings a very desirable flexibility to the entire application infrastructure. The authentication mechanism can be changed at any time without disrupting the applications. We live in an era when passwords are frowned upon and replaced by stronger authentication mechanisms. The flexibility that the AM-based approach brings may play a key part in that migration.

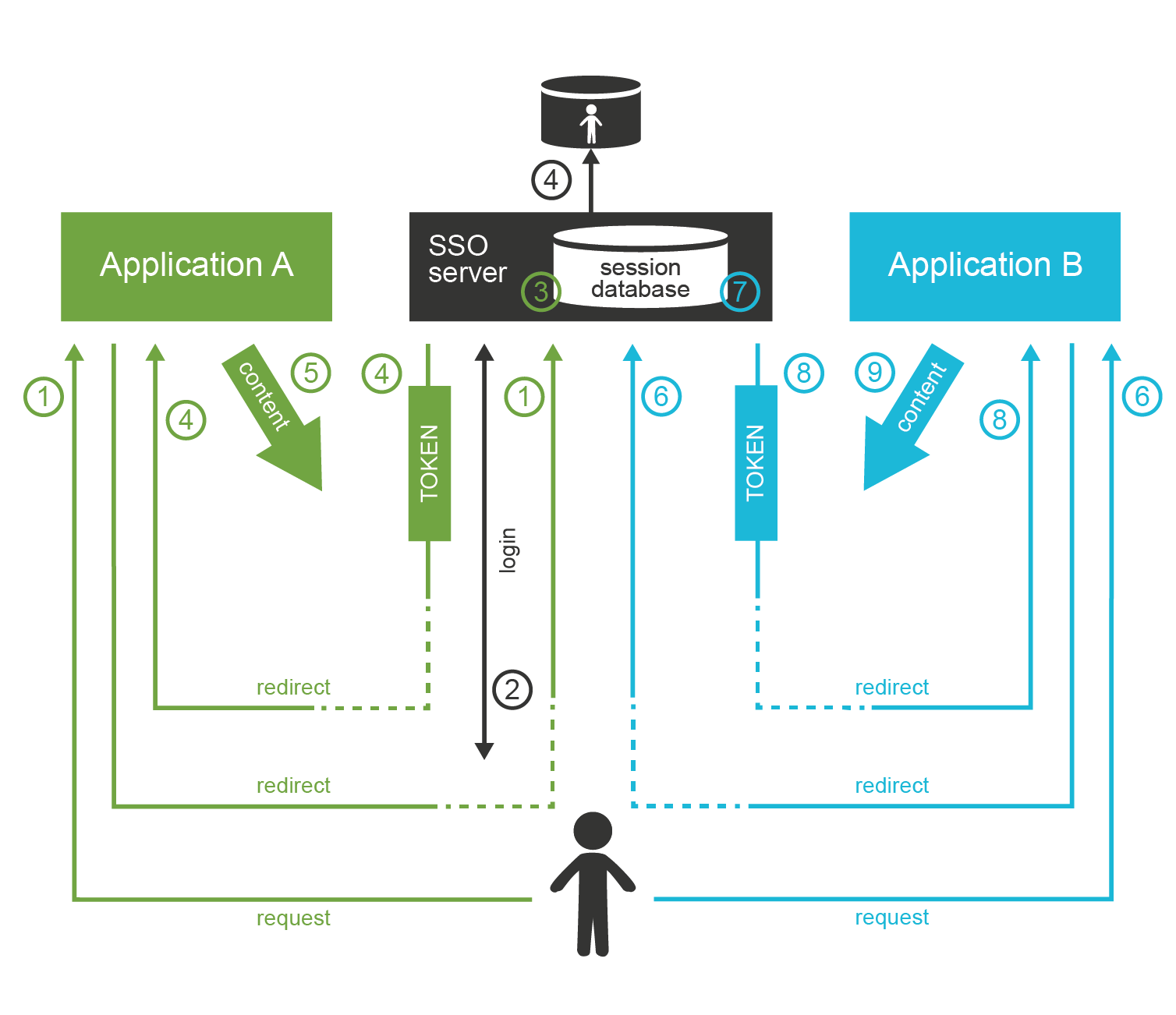

Web Single Sign-On

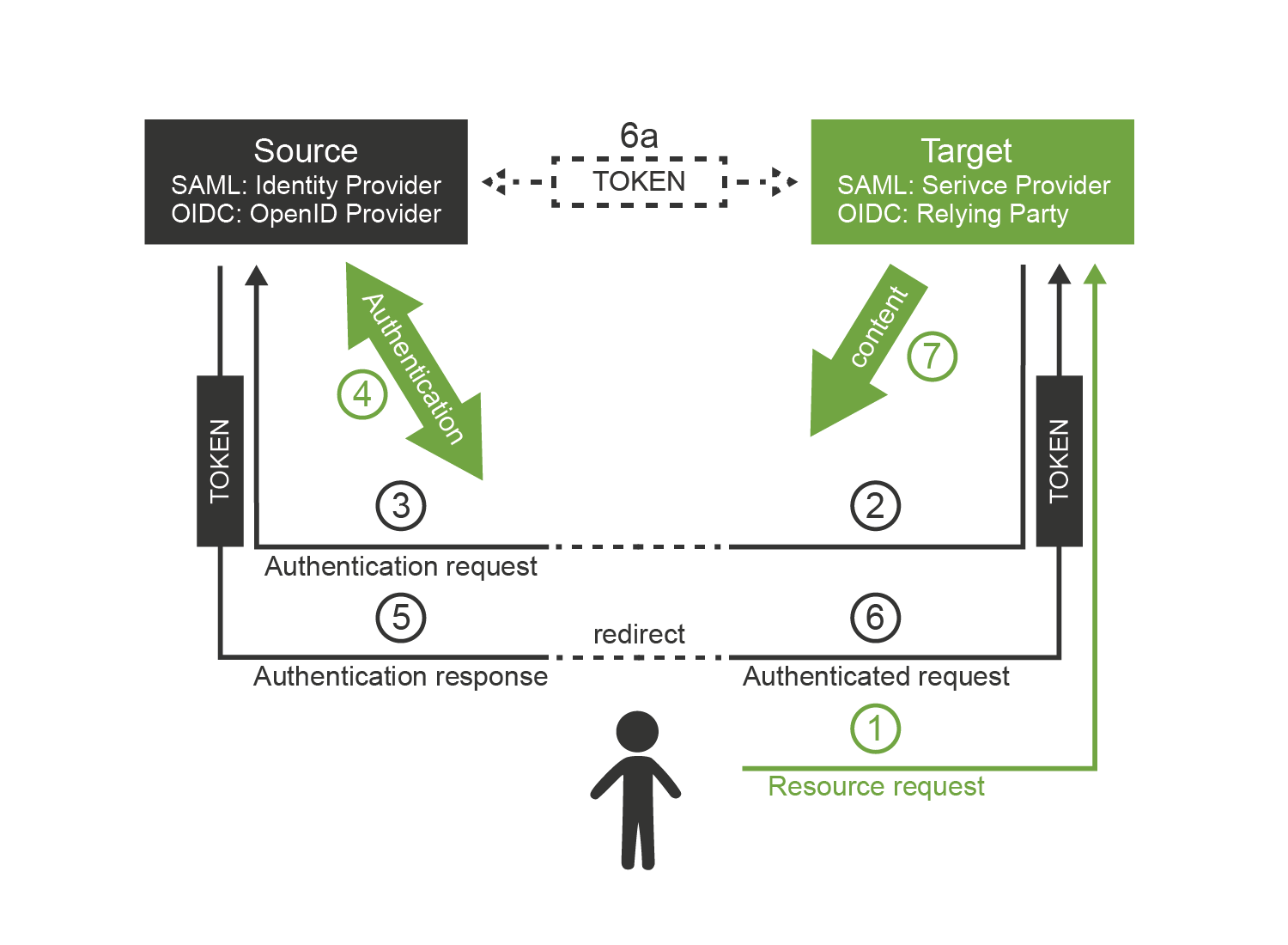

Single Sign-On (SSO) systems allow a user to authenticate once, and then access a number of different systems re-using that authentication. There are many SSO systems for web applications, yet they all appear to use the same basic principle of operation. The general access management flow is described below:

- Application A redirects the user to the access management server (SSO server).

- The access management server authenticates the user.

- The access management server establishes session (SSO session) with the user browser. This is crucial part of SSO mechanism.

- User is redirected back to application A. Application A usually establishes a local session with the user.

- User interacts with application A.

- When user tries to access application B, it redirects user to the access management server.

- The access management server checks for existence of SSO session. As the user authenticated with the access management server before, there is a valid SSO session.

- Access management server does not need to authenticate the user again, it immediately redirects user back to application B.

- Application B establishes a local session with the user and proceeds normally.

The user usually does not even realize that there were any redirects when accessing application B. There is no user interaction between the redirects, and the processing on the access management server is usually very fast. It looks like the user was logged into application B all the time.
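The flow above can be sketched as a small in-memory simulation. This is only an illustrative model, not how any particular access management product works: the class names, the cookie names and the plain-text password table are all assumptions made for the example, and real systems exchange signed tokens over HTTP redirects instead of calling each other directly.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class Browser:
    """Holds cookies, the only state the browser carries between redirects."""
    cookies: dict = field(default_factory=dict)


@dataclass
class AccessManagementServer:
    passwords: dict                                    # username -> password (toy store)
    sso_sessions: dict = field(default_factory=dict)   # SSO cookie -> username
    prompts_shown: int = 0                             # login prompts shown so far

    def handle_redirect(self, browser, credentials=None):
        """Return the username that is asserted back to the application."""
        cookie = browser.cookies.get("sso")
        if cookie in self.sso_sessions:
            # Valid SSO session: no prompt, redirect back immediately.
            return self.sso_sessions[cookie]
        # No SSO session: the AM server, not the application, prompts the user.
        self.prompts_shown += 1
        if credentials is None:
            raise PermissionError("credentials required")
        username, password = credentials
        if username not in self.passwords or self.passwords[username] != password:
            raise PermissionError("authentication failed")
        cookie = secrets.token_hex(16)
        self.sso_sessions[cookie] = username
        browser.cookies["sso"] = cookie
        return username


@dataclass
class Application:
    name: str
    am: AccessManagementServer
    local_sessions: dict = field(default_factory=dict)  # local cookie -> username

    def access(self, browser, credentials=None):
        key = f"local-{self.name}"
        cookie = browser.cookies.get(key)
        if cookie not in self.local_sessions:
            # No local session: redirect to the AM server, then create one.
            username = self.am.handle_redirect(browser, credentials)
            cookie = secrets.token_hex(16)
            self.local_sessions[cookie] = username
            browser.cookies[key] = cookie
        return f"{self.name}: hello {self.local_sessions[cookie]}"


am = AccessManagementServer(passwords={"alice": "secret"})
app_a, app_b = Application("A", am), Application("B", am)
browser = Browser()
print(app_a.access(browser, ("alice", "secret")))  # the only login prompt
print(app_b.access(browser))                        # SSO session is reused
print(am.prompts_shown)                             # 1
```

Note that the applications never see the password. They only receive the username that the access management server asserts, which is what makes it possible to change the authentication mechanism without touching the applications.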

Authorization in Access Management

The request of a user accessing an application is directly or indirectly passed through the access management server. Therefore, the access management server can analyze the request and evaluate whether the user request is authorized or not. That is a theory. Unfortunately, the situation is much more complicated in practice.

The AM server usually intercepts only the first request to access the application, because intercepting every request would hurt performance. After the first request, the application establishes a local session and proceeds with the operation without any communication with the AM server. Therefore, the AM server can only enforce authorization during the first request. This means it can only enforce very coarse-grained authorization decisions. In practice, it usually means that the AM server can make only all-or-nothing authorization decisions: either a particular user can access all parts of a particular application, or the user cannot access the application at all. The AM server usually cannot make any finer-grained decisions just by itself.

Some AM systems provide agents that can be deployed to applications and that enforce finer-grained authorization decisions. Such agents often rely on HTTP communication. They make decisions based on the URLs that the user is accessing. This approach might have worked well in the 1990s, but it has only very limited applicability in the age of single-page web applications and mobile applications. In such cases the authorization is usually applied to services rather than applications.

However, even applying the authorization to service front-ends does not solve the problem entirely. Sophisticated applications often need to make authorization decisions based on context, which is simply not available in the request or user profile at all. E.g. an e-banking application may allow or deny a transaction based on the sum of previous transactions that were made earlier that day. While it may be possible to synchronize all the authorization information into the user profile, it is usually not desirable. It would be a major burden to keep such information updated and consistent, not to mention security and privacy concerns. Many authorization schemes rely on a specific business logic, which is very difficult to centralize in an authorization server.
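The e-banking case can be made concrete with a short sketch. Everything in it is hypothetical: the `Transaction` type, the `is_allowed` function and the limit of 1000 are illustration only. The point is that the decision depends on transaction history that lives in the application database, not in the request or in the user profile.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass(frozen=True)
class Transaction:
    account: str
    amount: Decimal
    day: date


DAILY_LIMIT = Decimal("1000")  # hypothetical policy value


def is_allowed(new_tx, history, daily_limit=DAILY_LIMIT):
    """Allow the transaction only if the daily total stays within the limit.

    `history` comes from the application's own database. A central AM server
    that sees only the request and the user profile does not have this data.
    """
    spent_today = sum(
        (tx.amount for tx in history
         if tx.account == new_tx.account and tx.day == new_tx.day),
        Decimal("0"),
    )
    return spent_today + new_tx.amount <= daily_limit


today = date(2024, 11, 19)
history = [
    Transaction("acc-1", Decimal("600"), today),
    Transaction("acc-1", Decimal("300"), today),
]
print(is_allowed(Transaction("acc-1", Decimal("100"), today), history))
print(is_allowed(Transaction("acc-1", Decimal("200"), today), history))
```

The first call stays exactly at the limit and is allowed, the second exceeds it and is denied. An external authorization server could reproduce this only if the whole transaction history were continuously synchronized to it.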

Then there are implementation constraints. In theory, the authorization system should make only allow/deny decisions. However, this is not enough to implement an efficient application. The application cannot afford to list all the objects in the database, pass them to the authorization server, and then realize that the authorization server denied access to almost all of them. Authorization has to be processed before the search operation, and additional search filters have to be applied. This means that authorization mechanisms need to be integrated deep into the application logic, which significantly limits the applicability of centralized authorization mechanisms.
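The difference between the two approaches can be sketched with an in-memory SQLite database. The `documents` table, the owner-only policy and the function names are assumptions for the example; `authorize` merely stands in for a remote allow/deny call to an authorization server.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE documents (id INTEGER PRIMARY KEY, owner TEXT, title TEXT)")
conn.executemany(
    "INSERT INTO documents (owner, title) VALUES (?, ?)",
    [("alice", "Budget"), ("bob", "Roadmap"), ("alice", "Notes"), ("carol", "Plan")],
)


def authorize(user, doc_owner):
    """Stand-in for a remote allow/deny call to an authorization server."""
    return user == doc_owner


def search_post_filter(user):
    """Inefficient: fetch every object, then ask for a decision on each one."""
    rows = conn.execute("SELECT owner, title FROM documents ORDER BY id").fetchall()
    return [title for owner, title in rows if authorize(user, owner)]


def search_pre_filter(user):
    """Efficient: translate the policy into a search filter before querying."""
    rows = conn.execute(
        "SELECT title FROM documents WHERE owner = ? ORDER BY id", (user,)
    ).fetchall()
    return [title for (title,) in rows]


print(search_post_filter("alice"))
print(search_pre_filter("alice"))
```

Both functions return the same titles, but the first one reads the whole table and makes one authorization call per row. The second one needs the policy expressed as a query condition, which is exactly the kind of logic that ends up inside the application rather than in a central server.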

AM systems often come with a promise to unify authorization across all the applications and to centralize management of organization-wide security policies. Unfortunately, such broad promises are seldom fulfilled. The AM system can theoretically evaluate and enforce some authorization statements. This may work well during demonstrations and even in very simple deployments. Yet in complex practical deployments, this capability is extremely limited. The vast majority of the authorization decisions is carried out by each individual application and is completely outside the reach of an AM system.

SAML and OpenID Connect

Some access management systems use proprietary protocols to communicate with the applications and agents. This is obviously an interoperability issue – especially when the AM principles are used in the Internet environment. Indeed, it is the Internet that motivated standardization in this field.

The first widespread standardized protocol in this field was Security Assertion Markup Language (SAML). The original intent of SAML was to allow cross-domain sign-on and identity data sharing across organizations on the Internet. SAML is both an access management protocol and a security token format. SAML is quite complex and heavily based on XML standards. Its specifications are long and divided into several profiles, with many optional elements and features. Overall, SAML is not just a protocol, it is a set of very rich and flexible mechanisms.

The primary purpose of SAML is the transfer of identity information between organizations. There are big SAML-based federations with hundreds of participating organizations. Many e-government solutions are based on SAML, there are big partner networks running on SAML, and overall it looks like SAML is a success. Yet, SAML was a victim of its own flexibility and complexity. The latest fashion trends are not very favorable to SAML. XML and SOAP-based web service mechanisms are hopelessly out of fashion, which impacts the popularity of SAML. That has probably motivated the inception of other access management protocols.

The latest fashion still favors RESTful services and simpler architectural approaches. That probably contributed to the development of the OpenID Connect protocol (OIDC). OpenID Connect is based on much simpler mechanisms than SAML, but it reuses the same basic principles. OpenID Connect has a very eventful history. It all started with a bunch of homebrew protocols such as LID or SXIP, which are mostly forgotten today. That was followed by the development of the OpenID protocol, which was still very simple. OpenID gained some attention, especially with providers of Internet services. Despite its simplicity, OpenID was not very well engineered, and it quickly reached its technological limits. It was obvious that OpenID needed to be significantly improved. At that time, there was an almost unrelated protocol called OAuth, which was designed for management of cross-domain authorizations. That protocol was developed into something that was almost, but not quite, entirely unlike the original OAuth protocol. As the new protocol had almost nothing to do with the original OAuth protocol, it is perfectly understandable that it was dubbed OAuth2. In fact, OAuth2 is not really a protocol at all. It is rather a vaguely-defined framework to build other protocols. The OAuth2 framework was used to build a cross-domain authentication and user profile protocol. This new protocol is much more similar to SAML than to the original OpenID, therefore it was an obvious choice to call it OpenID Connect. Some traditions are just worth maintaining.

Now there are two protocols that are using the same principle and doing almost the same thing: SAML and OpenID Connect. The principle of both protocols is illustrated in the following diagram.

The interaction goes like this:

-

User is accessing a resource. This can be a web page or a web application running on the target site.

-

Target site does not have a valid session for the user. Therefore, it redirects user browser to the source site. It adds authentication request into that redirect.

-

Browser follows the redirect to the source site. The source site gets the authentication request and parses it.

-

If the user is not already authenticated with the source site, then the authentication happens now. The source site prompts for the username, password, certificate, one-time password or whatever credential is required by the policy. With a bit of luck the authentication succeeds.

-

The source site redirects the browser back to the target site. The source site adds authentication response to the redirect. The most important part of the response is a token. The token directly or indirectly asserts user’s identity.

-

The target site parses the authentication response and processes the token. The token may be just a reference (e.g. SAML artifact) pointing to real data, or it may be access key to another service that provides the data (OIDC UserInfo). In that case the target site makes another request (6a). This request is usually a direct one and does not use browser redirects. One way or another, the target site now has claims about user identity.

-

Target site evaluates the identity, processes authorizations and so on. A local session with the user is usually established at this point to skip the authentication redirects on the next request. The target site finally provides the content.
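The two ends of this interaction, as seen by the target site, can be sketched for the OpenID Connect case. The parameter names (`response_type`, `client_id`, `redirect_uri`, `scope`, `state`, `code`) are those of the OIDC authorization code flow. The endpoint URL, client identifier and callback address are made up for the example, and the sketch leaves out the direct token request and all token validation.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical endpoint and client registration, for illustration only.
AUTHORIZATION_ENDPOINT = "https://idp.example.com/authorize"
CLIENT_ID = "target-site"
REDIRECT_URI = "https://app.example.com/callback"


def build_authentication_request(state):
    """The target site redirects the browser to the source site with a request."""
    params = {
        "response_type": "code",   # authorization code flow
        "client_id": CLIENT_ID,
        "redirect_uri": REDIRECT_URI,
        "scope": "openid profile",
        "state": state,            # ties the response to this browser session
    }
    return f"{AUTHORIZATION_ENDPOINT}?{urlencode(params)}"


def parse_authentication_response(callback_url, expected_state):
    """The target site parses the response that arrived through the browser."""
    query = parse_qs(urlparse(callback_url).query)
    if query.get("state", [None])[0] != expected_state:
        raise ValueError("state mismatch, response rejected")
    # The code is only a reference. It is exchanged for tokens in a direct
    # request to the source site, without browser redirects.
    return query["code"][0]


state = secrets.token_urlsafe(16)
print(build_authentication_request(state))
callback = f"{REDIRECT_URI}?code=abc123&state={state}"
print(parse_authentication_response(callback, state))
```

The `state` value is generated by the target site and must come back unchanged. It is what lets the target site match the response to the request it issued for this particular browser session.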

The following table compares the terminology and technologies used in the SAML and OIDC worlds.

| | SAML World | OpenID Connect World |
|---|---|---|
| Source site | Identity Provider (IdP) | Identity Provider (IDP) or OpenID Provider (OP) |
| Target site | Service Provider (SP) | Relying Party (RP) |
| Token reference | SAML Assertion (or artifact) | ID token, access token |
| Token format | SAML Token | JSON Web Token (JWT) |
| Intended for | Web applications, web services (SOAP) | Web applications, mobile applications, REST services |
| Based on | N/A | OAuth2 |
| Data representation | XML | JSON |
| Cryptography framework | XMLenc, XMLdsig | JSON Object Signing and Encryption (JOSE) |
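To give a feel for the JWT token format named in the table, the following sketch builds and verifies a compact token using only the Python standard library. It is a simplified illustration under stated assumptions: it uses the symmetric HS256 algorithm to stay self-contained, whereas identity providers commonly sign ID tokens with asymmetric keys, and a real relying party would also have to check claims such as issuer, audience and expiration. The helper names and the claim values are made up for the example.

```python
import base64
import hashlib
import hmac
import json


def b64url_encode(data: bytes) -> str:
    """Base64url without padding, as used by JOSE."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(claims: dict, key: bytes) -> str:
    """Produce header.payload.signature, signed with HMAC-SHA256."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{b64url_encode(signature)}"


def verify_jwt(token: str, key: bytes) -> dict:
    """Check the signature and return the claims, or raise ValueError."""
    signing_input, _, signature = token.rpartition(".")
    expected = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(signature)):
        raise ValueError("bad signature")
    header_b64, payload_b64 = signing_input.split(".")
    if json.loads(b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    return json.loads(b64url_decode(payload_b64))


key = b"shared-secret-for-illustration-only"
token = sign_jwt({"iss": "https://idp.example.com", "sub": "alice"}, key)
print(token)
print(verify_jwt(token, key))
```

The token is just three base64url-encoded parts separated by dots, which is a large part of why it fits the JSON and REST world so easily when compared to signed XML documents.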

The careful reader has surely noticed the similarity with the web-based access management mechanisms. That’s right. This is the same wheel, reinvented over and over again. However, to be completely honest, we have limited our description to flows intended for the web browser only. Both SAML and OIDC have broader applicability than just web browser flows. The differences between the two protocols are much more obvious in these extended use cases. However, the web browser case nicely illustrates the principles and similarities of SAML, OpenID Connect and also the simple web-SSO systems.

Maybe the most important difference between SAML, OIDC and web-SSO (also known as Web Access Management or WAM) systems is the intended use:

-

SAML was designed for the world of web applications and SOAP web services. It will handle centralized (single-IDP) scenarios very well, but it can also work in decentralized federations. Go for SAML if you are using SOAP and WS-Security or if you plan to build a big decentralized federation. On second thought, you should probably forget about SAML anyway. It is not very fashionable these days.

-

OpenID Connect was designed mostly for use with social networks and similar Internet services. Its philosophy is still somewhat centralized. It will work well if there is one strong identity provider and many relying parties. Technologically, it will fit into the RESTful world much better than SAML. Current fashion trends are favorable to OIDC.

-

Web-SSO/WAM systems are designed to be used inside a single organization. This is ideal to implement SSO between several customer-facing applications, so the customers will have no idea that they interact with many applications and not just one. The web-SSO/WAM systems are not designed to work across organizational boundaries, which is quite unfortunate in the current "cloudy" IT environment.

Although SAML and OIDC are designed primarily for cross-domain use, it is no big surprise to see them inside a single organization. There is a clear benefit in using an open standardized protocol instead of a proprietary mechanism. However, it has to be expected that an SSO system based on SAML or OIDC will have a slightly more complicated setup than a simple Web-SSO/WAM system.

Kerberos, Enterprise SSO and Friends

Many of us would like to think that everything is based on web technologies today, and that non-web mechanisms are things of the past. Yet, there are still systems that are not web-based and where web-based SSO and AM mechanisms will not work. There are still some legacy applications, especially in the enterprise environment - applications based on rich clients or even character-based terminal interactions. Then there are network operating systems such as Windows and numerous UNIX variants, and there are network access technologies such as VPN or 802.1X. There are still many cases where web-based access management and SSO simply won’t work. These technologies usually pre-date the web. Honestly, centralized authentication and single sign-on are not entirely new ideas. It is perhaps no big surprise that there are authentication and SSO solutions even for non-web applications.

The classic classroom example of a non-web SSO system is Kerberos. The protocol originated at MIT in the 1980s. It is a single sign-on protocol for operating systems and rich clients, based on symmetric cryptography. Even though it is a cryptographic protocol, it is not too complicated to understand, and it has definitely withstood the test of time. It is used to this day, especially for authentication and SSO of network operating systems. It is a part of the Windows network domain, and it is often the preferred solution for authentication of UNIX servers. The most serious limitation of Kerberos is given by its use of symmetric cryptography. The weakness of symmetric cryptography is key management. Kerberos key management can be quite difficult, especially when a Kerberos realm gets very big. Key management is also one of the reasons why it is not very realistic to use Kerberos in cross-domain scenarios. However, inside a closed organization, Kerberos is still a very useful solution.

The major drawback of using Kerberos is that every application and client needs to be "kerberized". In other words, everybody who wants to take part in Kerberos authentication needs to have Kerberos support in their software. There are kerberized versions of many network utilities, so this is usually not a problem for UNIX-based networks. However, it is a big problem for generic applications. There is some support for Kerberos in common web browsers, which is often referred to as "SPNEGO". However, this support is usually limited to interoperability with Windows domains. Even though Kerberos is still useful for operating system SSO, it is not a generic solution for all applications.

Many network devices use the RADIUS protocol for what network engineers call "Authentication, Authorization and Accounting" (AAA). However, RADIUS is a back-end protocol. It does not take care of client interactions. The goal of RADIUS is that the network device (e.g. WiFi access point, router or VPN gateway) can validate user credentials that it has received as part of another protocol. The client connecting to a VPN or WiFi network does not know anything about RADIUS. Therefore, RADIUS is similar to the LDAP protocol, and it is not really an access management technology.

Obviously, there is no simple and elegant solution that can provide SSO for all enterprise applications. Despite that, one technology appeared in the 1990s and early 2000s that promised to deliver a universal enterprise SSO solution. It was called "Enterprise Single Sign-On" (ESSO). The ESSO approach was to use agents installed on every client device. The agent detects when a login dialog appears on the screen, fills in the username and password, and submits the dialog. If the agent is fast enough, the user does not even notice the dialog, and this creates the impression of Single Sign-On. However, there are obvious drawbacks. The agents need to know all the passwords in cleartext form. There are ESSO variations with randomly generated passwords or even single-use passwords, which partially alleviate this problem. In that case there is an additional drawback: the ESSO also needs to be integrated with the password management of all the applications, which is not entirely easy. However, the most serious drawback of ESSO is the agents. These only work on workstations that are strictly controlled by the enterprise. Yet the world is different now: the enterprise perimeter has effectively disappeared, and the enterprise cannot really control all the client devices. Therefore, ESSO too is now mostly a thing of the past.

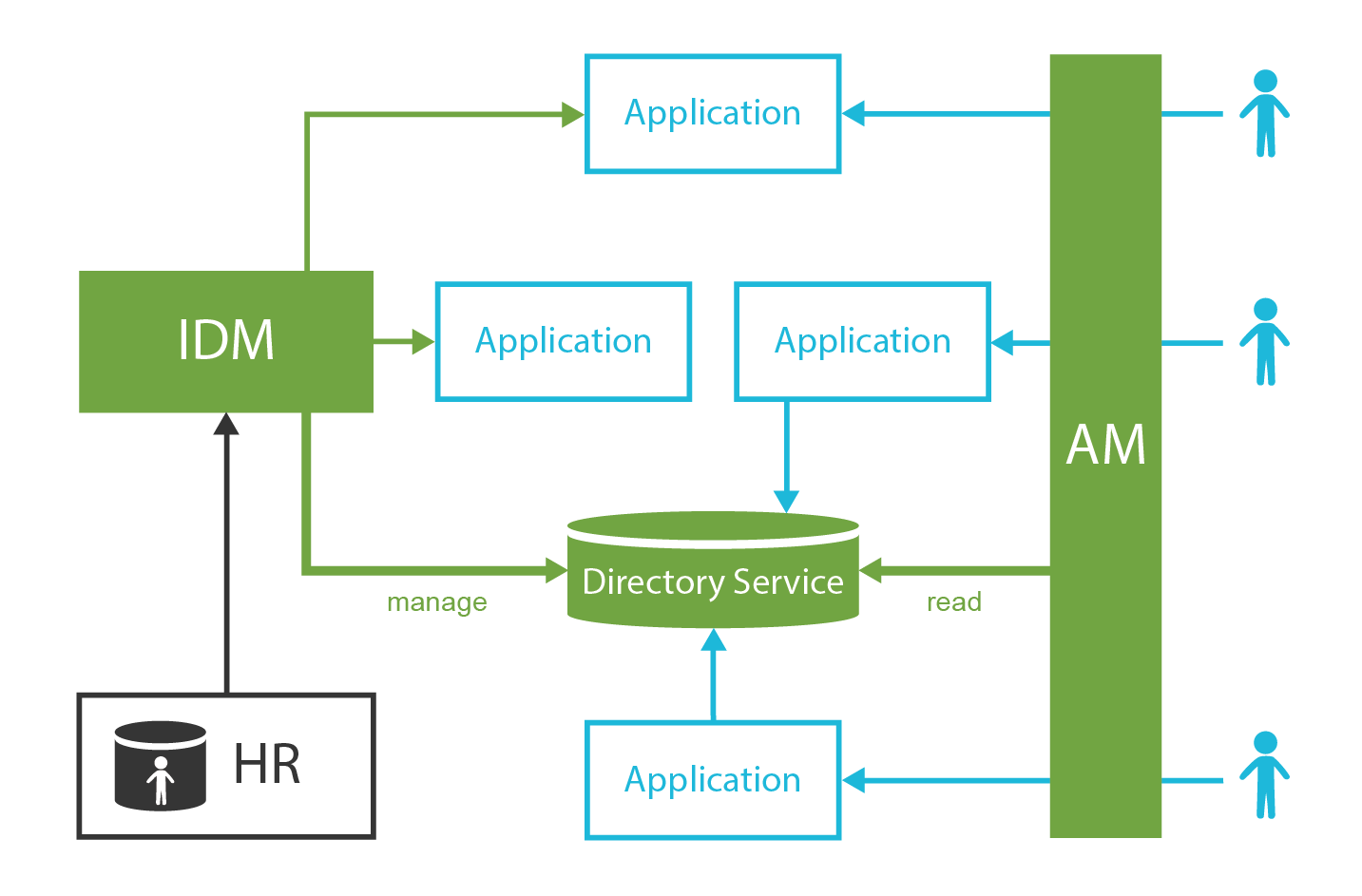

Access Management and the Data

Access Management servers and identity providers need to know the data about users to work properly. However, it is quite complicated. The purpose of access management systems is to manage access of users to the applications, which usually means processing authentication, authorization (partially), auditing of the access and so on. For this to work, the AM system needs access to the database where the user data are stored. It needs access to usernames, passwords and other credentials, authorization policies, attributes and so on. The AM systems usually do not store these data themselves. They rely on external data stores. In most cases, these data stores are directory services or NoSQL databases. This is an obvious choice: these databases are lightweight, highly available and extremely scalable. The AM system usually needs just simple attributes, therefore the limited capabilities of directories and NoSQL databases are not a limiting factor here. The marriage of access management and a lightweight database is an obvious and very smart match.

However, there is one critical issue – especially if the AM system is also used as a single sign-on server. The data in the directory service and the data in the applications must be consistent. E.g. it is a huge problem if one user has different usernames in several applications. Which username should the user log in with? Which username should be sent to the applications? There are ways to handle such situations, but they are usually very cumbersome and expensive. It is much easier to unify the data before the AM system is deployed.
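A first step in unifying the data is simply finding out where the usernames differ. The following sketch assumes that accounts from all applications can be exported as `(application, person_id, username)` tuples and that a stable person identifier, such as an employee number, already exists. Both are assumptions for the example. In practice, correlating accounts to people is often the hardest part of the cleanup.

```python
from collections import defaultdict


def find_inconsistent_usernames(accounts):
    """Return people whose username differs between applications.

    `accounts` is an iterable of (application, person_id, username) tuples.
    The result maps person_id to a {application: username} dictionary.
    """
    usernames = defaultdict(dict)          # person_id -> {application: username}
    for application, person_id, username in accounts:
        usernames[person_id][application] = username
    return {
        person: per_app
        for person, per_app in usernames.items()
        if len(set(per_app.values())) > 1
    }


accounts = [
    ("mail", "1001", "john.smith"),
    ("crm", "1001", "jsmith"),
    ("mail", "1002", "mjones"),
    ("crm", "1002", "mjones"),
]
print(find_inconsistent_usernames(accounts))
```

Here only person `1001` is reported, because the mail and CRM usernames differ. Such a report tells the project team how much cleanup is needed before single sign-on can present one identity to all applications.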

Even though the "M" in AM stands for "management", a typical AM system has only very limited data management capabilities. The AM systems usually assume that the underlying user database is already properly managed. E.g. a typical AM system has only a very minimalistic user interface to create, modify and delete user records. Some AM systems may have self-service functionality (such as password reset), but even that functionality is usually very limited. Even though the AM relies on the fact that the data in the AM directory service and the data in applications are consistent, there is usually no way to fully synchronize the data by using the AM system itself. There may be methods for on-demand or opportunistic data updates, e.g. creating a user record in the database when the user logs in for the first time. However, there are usually no solutions for deleting the records or for updating the records of inactive users.
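The limitation of on-demand updates can be illustrated with a toy model. The `OnDemandUserStore` class and its attribute names are hypothetical. The sketch shows only the principle: records are created and refreshed at login time, so nothing ever happens to the record of a user who no longer logs in.

```python
from datetime import date


class OnDemandUserStore:
    """Toy application-side user table that is populated at login time."""

    def __init__(self):
        self.records = {}   # username -> attributes, including "last_login"

    def on_login(self, username, asserted_attributes, today):
        # Create the record on first login, refresh it on every later login.
        record = self.records.setdefault(username, {})
        record.update(asserted_attributes)
        record["last_login"] = today
        return record


store = OnDemandUserStore()
store.on_login("alice", {"full_name": "Alice Anderson"}, date(2024, 1, 10))
store.on_login("bob", {"full_name": "Bob Brown"}, date(2024, 1, 11))

# Alice changes her name; the next login picks the change up.
store.on_login("alice", {"full_name": "Alice Baker"}, date(2024, 6, 1))

# Bob leaves the organization and never logs in again, so no login event
# ever reaches the application and his record stays there.
print(sorted(store.records))
print(store.records["bob"]["last_login"])
```

Alice's record follows her changes because she keeps logging in. Bob's record stays in the application indefinitely with stale data, which is the gap that an identity management system is meant to close.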

Therefore, the AM systems are usually not deployed alone. The underlying directory service or NoSQL database is almost always a hard requirement for even the humblest AM functionality. However, for the AM system to really work properly, it needs something to manage and synchronize the data. An identity management (IDM) system is usually used for that purpose. In fact, it is usually strongly recommended to deploy a directory and an IDM system before the AM system. The AM system cannot work without the data. If the AM tries working with data that are not maintained properly, it will not take long until it fails.

Advantages and Disadvantages of Access Management Systems

Access management systems have significant advantages. Most of the characteristics are given by the AM principle of centralized authentication. As the authentication is carried out by a central access management server, it can be easily controlled and audited. Such centralization can be used to consistently apply authentication policies - and to easily change them when needed. It also allows better utilization of an investment into authentication technologies. E.g. multi-factor or adaptive authentication can be quite expensive if it has to be implemented by every application. When it is implemented in the AM server, it is re-used by all the applications without additional investment.

However, there are also drawbacks. As the access management is centralized, it is obviously a single point of failure. Nobody is going to log in when the AM server fails. This obviously means a major impact on the functionality of all applications. Therefore, AM servers need to be highly available and scalable, which is not always an easy task. The AM servers need very careful sizing, as they may easily become performance bottlenecks.

However, perhaps the most severe drawback is the total cost of an access management solution. The cost of the AM server itself is usually not a major issue. However, the server will not work just by itself. The server needs to be integrated with every application. Even though there are standard protocols, the integration is far from being straightforward. Support for AM standards and protocols in the applications is still not universal. Older enterprise applications especially need to be modified to switch their authentication subsystem to the AM server. This is often so costly that the adoption of AM technologies is limited to just a handful of enterprise applications. Although recent applications usually have some support for AM protocols, setting it up is still not an easy task. There are subtle incompatibilities and treacherous details, especially if the integration goes beyond mere authentication into authorization and user profile management.

Even though many organizations are planning deployment of an AM system as their first step in the IAM project, this approach seldom succeeds. Projects usually plan to integrate 50-80% of applications into the AM solution. However, the reality is that only a handful of applications can be easily integrated with the AM system. The rest of the applications are integrated using an identity management (IDM) system, which is hastily added to the project. Therefore, it is better to plan ahead: analyze the AM integration effort, prototype the deployment, and make a realistic plan for the AM solution. Make sure the AM can really bring the promised benefits. Starting with IDM and adding the AM part later is often a much more reasonable strategy.

Homogeneous Access Management Myth

There are at least two popular access management protocols for the web. There are huge identity federations based on SAML. Cloud services and social networks usually use OpenID Connect or its variations. There are variations and related protocols to be used for mobile applications and services. Then there are other SSO protocols, primarily focused on intra-organizational use. There is no single protocol or mechanism that can solve all the problems in the AM world.

Additionally, the redirection approach of AM systems assumes that the user has something that can display authentication prompts and carry out user interaction. This is usually a web browser. Therefore, the original variant of access management mechanisms applies mostly to conventional web-based applications. Variations of this approach are also applicable to network services and single-page web applications. However, this approach is usually not directly applicable to applications that use rich clients, operating system authentication and similar "traditional" applications. The browser is not the primary environment that can be used to carry out the authentication in those cases. There are some solutions that usually rely on an embedded browser, however that does not change the basic fact that the AM technologies are not entirely suitable for this environment. These applications usually rely on Kerberos as an SSO system or do not integrate with any SSO system at all.

A typical IT environment is composed of a wild mix of technologies, and not all of them are entirely web-based. Therefore, it is quite unlikely that a single AM system can apply to everything that is deployed in your organization. Authentication is very tightly bound to the user interaction, therefore it depends on the way the user interacts with the application. As the user is using different technologies to interact with the web application, mobile application and operating system, it is obvious that the authentication and SSO methods for these systems will be different as well.

Therefore, it has to be expected that there will be several AM or SSO systems in the organization, each one serving its own technological island. Each island needs to be managed.

Practical Access Management

Unifying access management system, Single Sign-On, cross-domain identity federation, social login, universally-applicable 2-factor authentication – these are the things that people usually want when they think about Identity and Access Management (IAM). These are all perfectly valid requirements. However, everything has its cost. It is notoriously difficult to estimate the cost of access management solutions, because the majority of the cost is not in the AM software. A huge part of the total cost is hidden inside existing applications, services and clients. All of this has to be considered when planning an access management project.

Even though the AM is what people usually want, it is usually wise not to start with AM as the first step. AM deployment has many dependencies: a unified user database, managed and continually synchronized data, and applications that are flexible enough to be integrated are the very minimum. Unless your IT infrastructure is extremely homogeneous and simple, it is very unlikely that these dependencies are already satisfied. Therefore, it is almost certain that an AM project attempted at the beginning of the IAM program will not reach its goals. It is much more likely for such AM projects to fail miserably. On the other hand, if the AM project is properly scoped and planned and has realistic goals, there is a high chance of success.

Perhaps the best way to evaluate an AM project is to ask several questions:

-

Do I really need access management for all applications? Do I need 100% coverage? Can I afford all the costs? Maybe it is enough to integrate just a couple of applications that are the source of the worst pain. Do I know which applications these are? Do I know what my users really use during their workday? Do I know what they need?

-

What are the real security benefits of AM deployment? Will I be disabling the native authentication to the applications? Even for system administrators? What will I do in case of administration emergencies (e.g. system recovery)? Would system administrators still be able to circumvent the AM system? If yes then what is the real security benefit? If not then what will be the recovery procedure in case the AM system fails?

-

Do I really need SSO for older and rarely used applications? What is the real problem here? Is the problem that users are entering the password several times per day? Or is the real problem that they have to enter a different username or password to different applications, and they keep forgetting the credentials? Maybe simple data cleanup and password management will solve the worst problems, and I can save a huge amount of money on AM project?

The access management technologies are the most visible part of the IAM program. However, they are also the most expensive part, and the most difficult piece to set up and maintain. Therefore, do not underestimate other IAM technologies. Do not try to solve every problem with the AM golden hammer. Using the right tool for the job is a good approach in every situation. In an IAM program, it is absolutely critical for success.

Identity Management

Identity management (IDM) is maybe the most overlooked and underestimated technology in the whole identity and access management (IAM) field. Yet IDM is a crucial part of almost every IAM solution. It is IDM that can bring substantial benefits to almost any organization. So, what is that mysterious IDM thing, really?

Identity management is exactly what the name says: it is all about managing identities. It is about the processes to create Active Directory accounts and mailboxes for a new employee. IDM sets up accounts for students at the beginning of each school year. IDM makes it possible to immediately disable all access to a suspicious user during a security incident. IDM takes care of adding new privileges and removing old privileges of users during reorganization. IDM makes sure all the accounts are properly disabled when the employee leaves the company. IDM automatically sets up privileges for students and staff appropriate for their classes. IDM records access privileges of temporary workers, partners, support engineers and all the third-party identities that are not maintained in your human resources (HR) system. IDM automates the processes of role request and approval. IDM records every change in user privileges in the audit trail. IDM governs the annual reviews of roles and access privileges. IDM makes sure the copies of user data that are kept in the applications are synchronized and properly managed. IDM makes sure data are managed according to data protection rules. IDM does many other things that are absolutely essential for every organization to operate in an efficient and secure manner.

It looks like IDM is the best thing since sliced bread. So where’s the catch? Oh yes, there is a catch. At least, there was a catch. The IDM systems used to be expensive. Very expensive. The IDM systems used to be so expensive that it was very difficult to justify the cost even with such substantial and clear benefits. That time is over now. Identity management is still not cheap. However, the benefits clearly outweigh the costs now.

|

Terminology

The term identity management is often used for the whole identity and access management (IAM) field. This is somewhat confusing, because technologies such as single sign-on or access management do not really manage the identities. Such technologies manage the access to the applications. Even directory servers do not exactly manage the identities. Directory servers store the identities and provide access to them.

There is in fact one whole branch of technologies that manage identities. Those systems are responsible for creating identities and maintaining them. Those are sometimes referred to as identity provisioning, identity lifecycle management or identity administration systems. However, given the current state of the technology, such names are indeed an understatement. Those systems can do much more than just provisioning or management of identity lifecycle. Recently, the term Identity Governance and Administration (IGA) was introduced. It is supposed to include identity management systems with identity governance capabilities. We will refer to these systems simply as identity management (IDM) systems. When we refer to the entire field that contains access management, directory services, identity management and governance, we will use the term identity and access management (IAM).

|

History of Identity Management

Let’s start at the beginning. In the 1990s there was no technology that would be clearly identified as "identity management". Of course, all the problems above had existed almost since the beginning of modern computing. There had always been some solutions for those problems. Historically, most of those solutions were based on paperwork and scripting. That worked quite well - until the big system integration wave spread through the industry in the 1990s and 2000s. As data and processes in individual applications got integrated, the identity management problems became much more pronounced. Manual paper-based processes were just too slow for the age of information superhighways. The scripts were too difficult to maintain in a world where a new application is deployed every couple of weeks. The identity integration effort naturally started with the state-of-the-art identity technology of the day: directory services. As we have already shown, the directories were not entirely ideal tools for the job. The directories did not work very well in an environment where people thought that LDAP is some kind of dangerous disease, where usernames and identifiers were assigned quite randomly and where every application insisted that the only authoritative data are those stored in its own database.

The integration problems motivated the inception of identity management technologies in the early 2000s. Early IDM systems were just data synchronization engines that were more or less hard-coded to operate with users and accounts. Some simple role-based access control (RBAC) engines and administration interfaces were added a bit later. By the mid-2000s there were several more-or-less complete IDM systems. This was the first generation of real IDM systems. These systems were able to synchronize identity data between applications and provide some basic management capabilities. Even such simple functionality was a huge success at that time. The IDM systems could synchronize the data without any major modification of the applications, therefore they brought the integration cost to a reasonable level - at the application side. The problem was that the cost of the IDM systems themselves was quite high. These systems were still somewhat crude, therefore the configuration and customization required a very specialized class of engineers. IDM engineers were almost exclusively employed by IDM vendors, big system integrators and expensive consulting companies. This made the deployment of IDM solutions prohibitively expensive for many mid-size and smaller organizations. Even big organizations often deployed IDM solutions with quite limited features to make the cost acceptable.

Early IDM systems evolved and improved over time. Companion products for identity governance and compliance appeared and augmented the functionality. Yet, it is often almost impossible to change the original architecture of a product. Therefore, almost all the first-generation IDM products struggled with limitations of the early product design. Most of them do not exist today, or are considered to be legacy.

All these IDM systems were commercial closed-source software. However, the closed-source character of the IDM products is itself a huge problem. Every IDM solution has to be more-or-less customized - which usually means more rather than less. It has to be the IDM system that adapts, and not the applications. Requiring each application to adapt to a standardized IDM interface means a lot of changes in a lot of different places, platforms and languages. The total cost of all necessary modifications adds up to a huge number. Such an approach is tried from time to time, and it almost always fails. While there are many applications in the IT infrastructure, there is just one IDM system. If the IDM system adapts to applications and business processes, the changes are usually smaller, and they are all in one place, implemented in a single platform. The IDM system must be able to adapt. It has to adapt a great deal, and it has to adapt easily and rapidly. Closed-source software is notoriously bad at adapting to requirements that are difficult to predict. In practice, this means that the IDM projects based on first-generation products were hard to use, slow to adapt and expensive.

Even worse, closed-source software is prone to vendor lock-in. Once the IDM system is deployed and integrated, it is extremely difficult to replace it with a competing system. The closed-source vendor is the only entity that can modify the system, and the system cannot be efficiently replaced. This means that the end customer is not in a position to negotiate, which in turn means high maintenance costs. It naturally follows that the first generation of IDM systems was a huge commercial success. For the vendors, that is.

Then the 2000s were suddenly over, with an economic crash at the end. We can only speculate what the reasons were, but the fact is that around the years 2009-2011 several very interesting new IDM products appeared on the market. One interesting thing is that all of them were more-or-less open source. The benefit that open source character brings may be easy to overlook for business-oriented people. However, the benefits of open source in identity management are almost impossible to overstate. As every single IDM engineer knows, understanding of the IDM product, and the ability to adapt the product, are two critical aspects of any IDM project. Open source is the best way to support both understanding and flexibility. There is also a third important advantage: it is almost impossible to create a vendor lock-in situation with an open source product. All the open source products are backed by companies that offer professional support services that are equivalent to the services offered for commercial IDM products. This brings quality assurance for the products and related services. However, the companies do not really "own" the products, so there is no way for them to abuse intellectual property rights against the customers. Open source brings a new and revolutionary approach, both to technology and business.

New products have appeared since the early 2010s, in many areas of identity and access management. However, it is still a humble identity management platform that forms the core of the solution. The products have evolved to the point that the entire field is now called identity governance and administration (IGA). New functionality was added to the products: identity governance, analytics, policy management, advanced reporting, and much more. New IGA platforms are much more powerful than ever, yet they are still the heart of IAM solutions.

What is This Identity Management, Anyway?

Identity management (IDM) is a simple term which encompasses very rich and comprehensive functionality. It contains identity provisioning (and reprovisioning and deprovisioning), synchronization, organizational structure management, role-based access control, data consistency, approval processes, auditing and a few dozen other features. All of that is thoroughly blended and mixed with a pinch of scripting and other seasoning until there is a smooth IDM solution. Therefore, it is quite difficult to tell what identity management is just by using a dictionary-like definition. We would rather describe what identity management is by using a couple of typical usage scenarios.

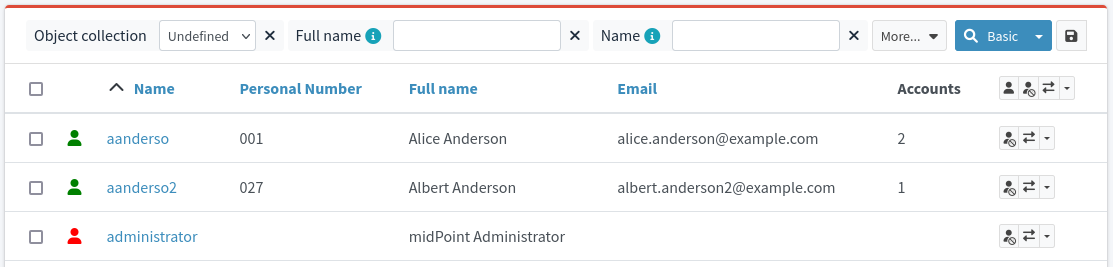

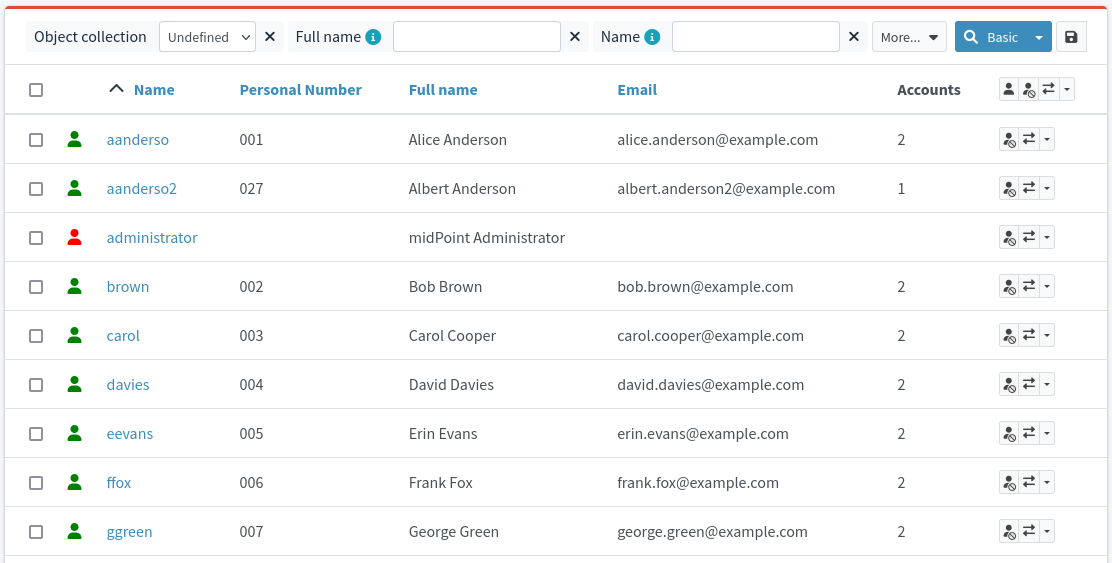

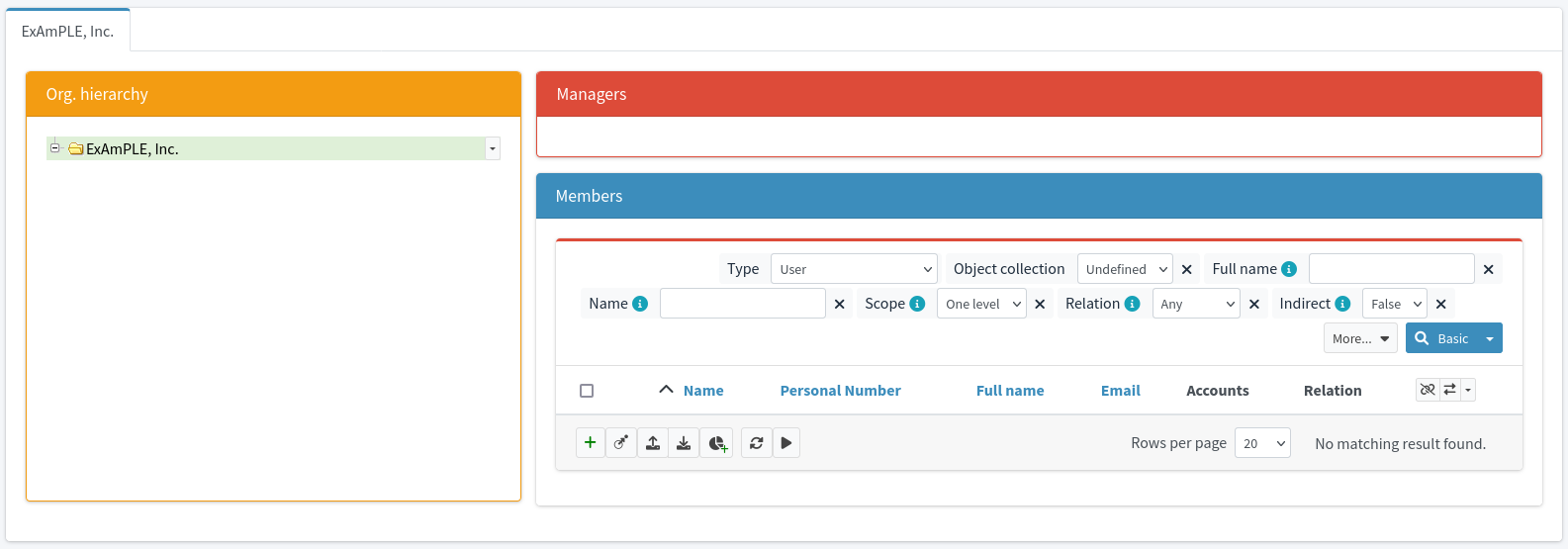

Let’s have a fictional company called ExAmPLE, Inc. This company has a few thousand employees, a decent partner network, customers and suppliers, and all the other things that real-world companies have. ExAmPLE also has an IDM system running in its IT infrastructure.

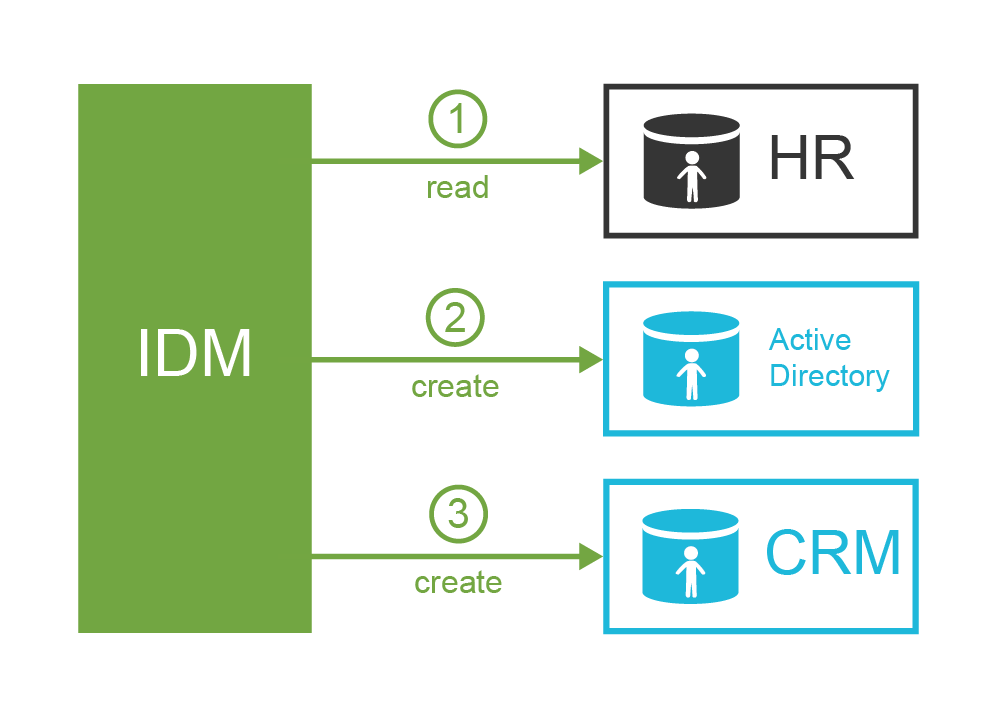

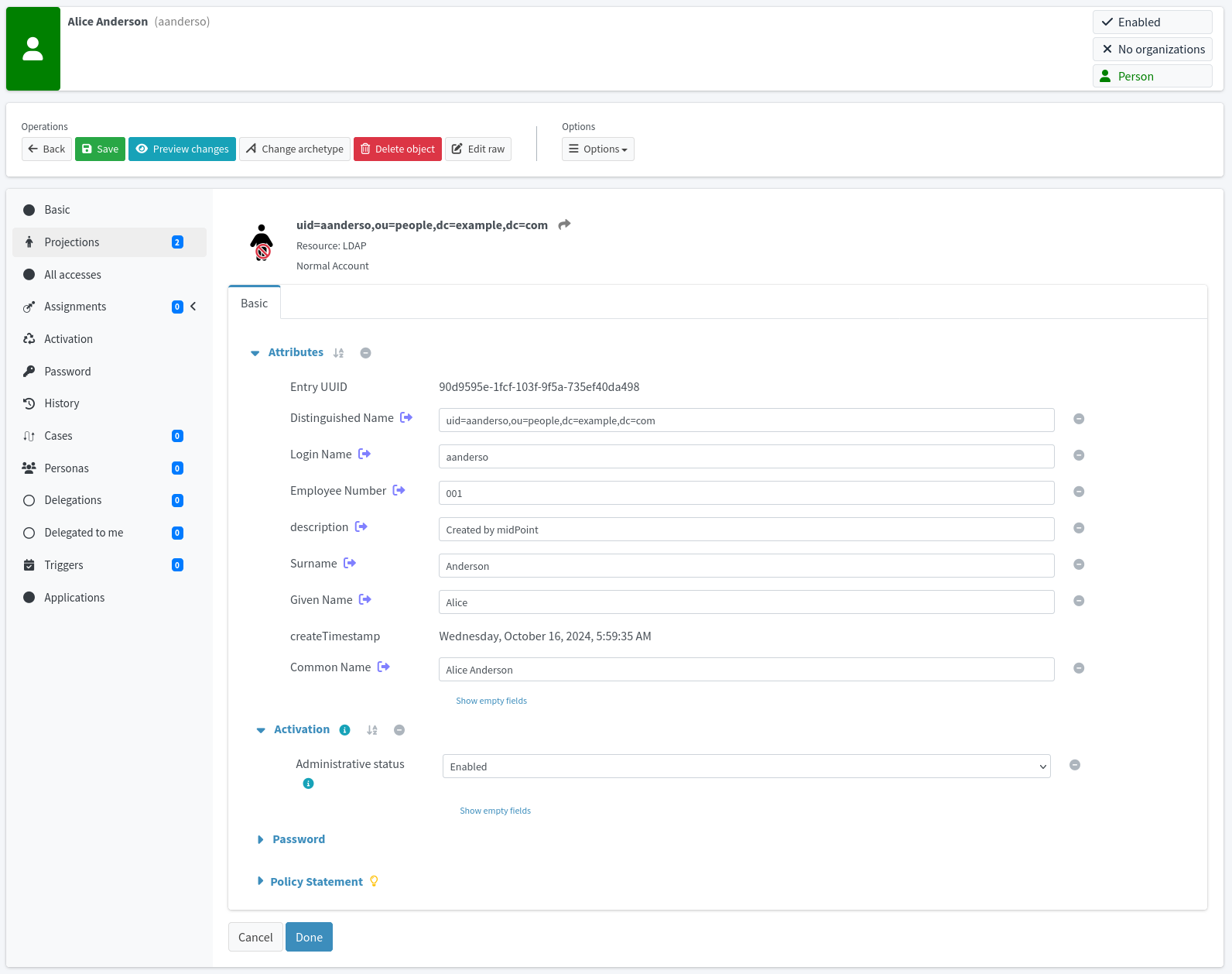

ExAmPLE hires a new employee, Alice.

Alice signs an employee contract a few days before she starts her employment.

The contract is entered into the human resource (HR) system by the ExAmPLE HR staff.

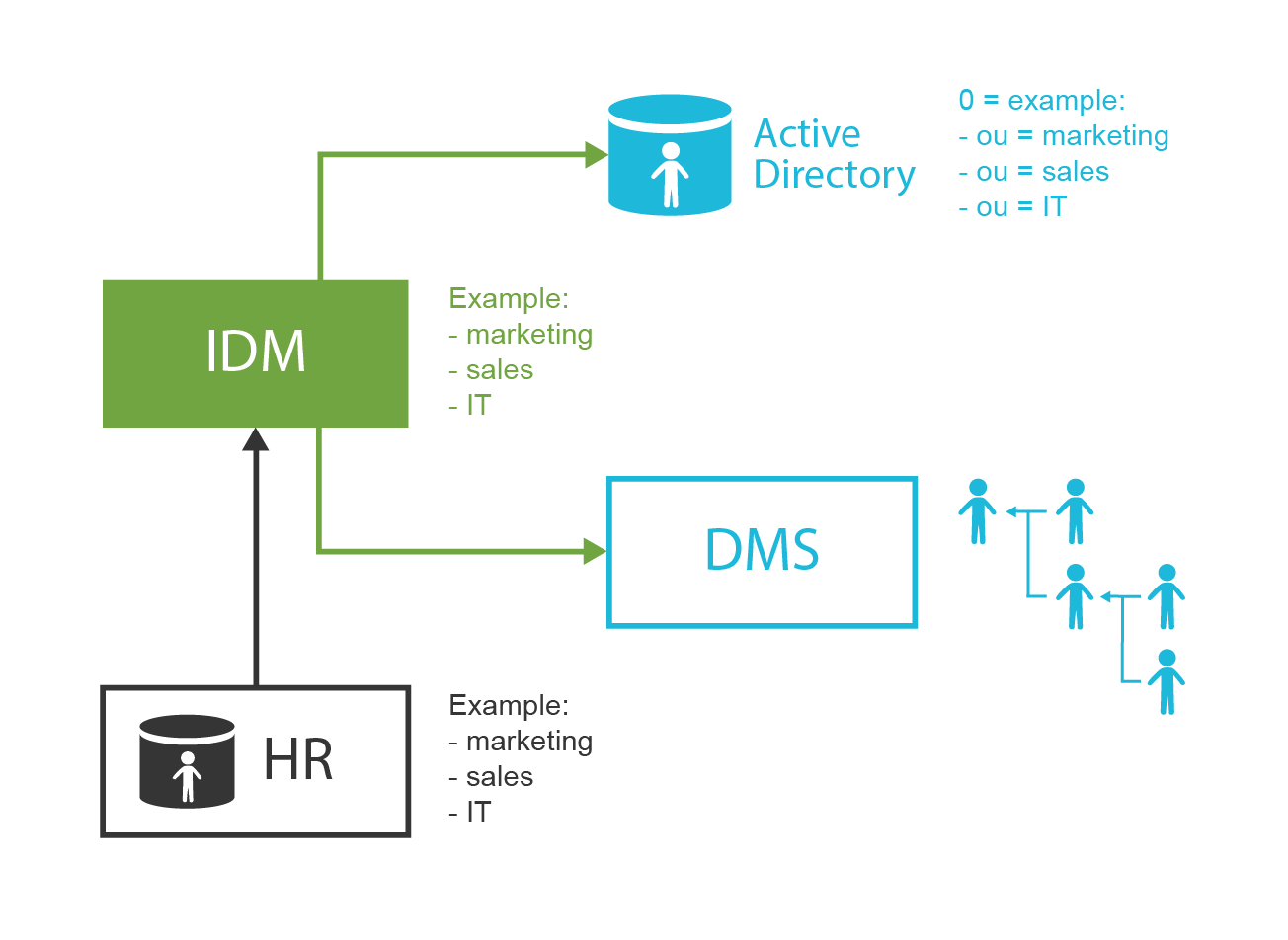

The IDM system periodically scans the HR records, and it discovers the record of a new hire.

The IDM system pulls in the record and analyzes it.

The IDM system takes the user’s name and employee number from the HR record and generates a unique username. Based on that information, it creates a user record in the IDM system.

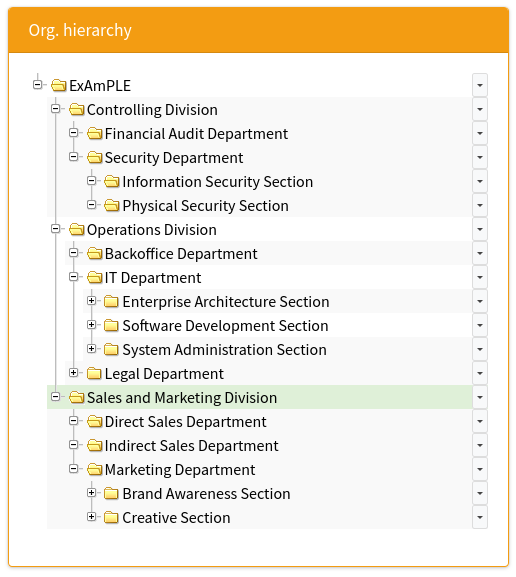

The IDM system also gets the organization code of 11001 from the HR record.

The IDM looks inside its organizational tree and discovers that the code 11001 belongs to the sales department.

Therefore, IDM will automatically assign the user to the sales department.

The IDM will also process the work position code of S007 in the HR record.

The IDM policies say that the code S007 means sales agent and that anybody with that code should automatically receive the Sales Agent role.

Therefore, the IDM assigns that role.

As Alice is a core employee, the IDM system automatically creates an Active Directory account for her together with a company mailbox.

The account will be placed into the Sales Department organizational unit.

The Sales Agent role entitles the user to more privileges.

Therefore, the Active Directory account is automatically assigned to sales groups and distribution lists.

The role also gives access to the CRM system, therefore a CRM account is also automatically created and assigned to appropriate groups.

All of that happens in a couple of seconds after the new HR record is detected.

It all happens automatically.
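The onboarding steps above can be sketched as a small set of lookup rules. This is only an illustrative sketch, not how any particular IDM product is configured: the codes 11001 and S007 come from the scenario, while the table names, the `onboard` function, the username scheme, the group names and Alice's surname and employee number are assumptions made up for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical policy tables. The codes come from the scenario above;
# the structure and the group names are assumptions for illustration.
ORG_CODES = {"11001": "Sales Department"}
POSITION_ROLES = {"S007": "Sales Agent"}
ROLE_ACCOUNTS = {
    "Sales Agent": {
        "Active Directory": ["sales groups", "sales distribution lists"],
        "CRM": ["sales agents"],
    },
}


@dataclass
class User:
    username: str
    full_name: str
    employee_number: str
    org_unit: str | None = None
    roles: list[str] = field(default_factory=list)
    # Maps a target system to the groups the account belongs to.
    accounts: dict[str, list[str]] = field(default_factory=dict)


def generate_username(full_name: str, taken: set[str]) -> str:
    """Derive a username from the first name; add a number on collision."""
    base = full_name.lower().split()[0]
    candidate, n = base, 1
    while candidate in taken:
        n += 1
        candidate = f"{base}{n}"
    taken.add(candidate)
    return candidate


def onboard(hr_record: dict, taken: set[str]) -> User:
    """Turn one HR record into a user with org unit, roles and accounts."""
    user = User(
        username=generate_username(hr_record["name"], taken),
        full_name=hr_record["name"],
        employee_number=hr_record["employee_number"],
    )
    user.org_unit = ORG_CODES.get(hr_record["org_code"])
    role = POSITION_ROLES.get(hr_record["position_code"])
    if role is not None:
        user.roles.append(role)
    # Every core employee gets an Active Directory account (with mailbox).
    user.accounts["Active Directory"] = []
    # Roles add further accounts and group memberships.
    for assigned in user.roles:
        for system, groups in ROLE_ACCOUNTS.get(assigned, {}).items():
            user.accounts.setdefault(system, []).extend(groups)
    return user


# Hypothetical HR record; "alice" is already taken to show the collision rule.
alice = onboard(
    {
        "name": "Alice Anderson",
        "employee_number": "001",
        "org_code": "11001",
        "position_code": "S007",
    },
    taken={"alice"},
)
```

The point of the sketch is that the HR record carries only codes, and every further decision (department, role, accounts, groups) follows from policy data held in the IDM system.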

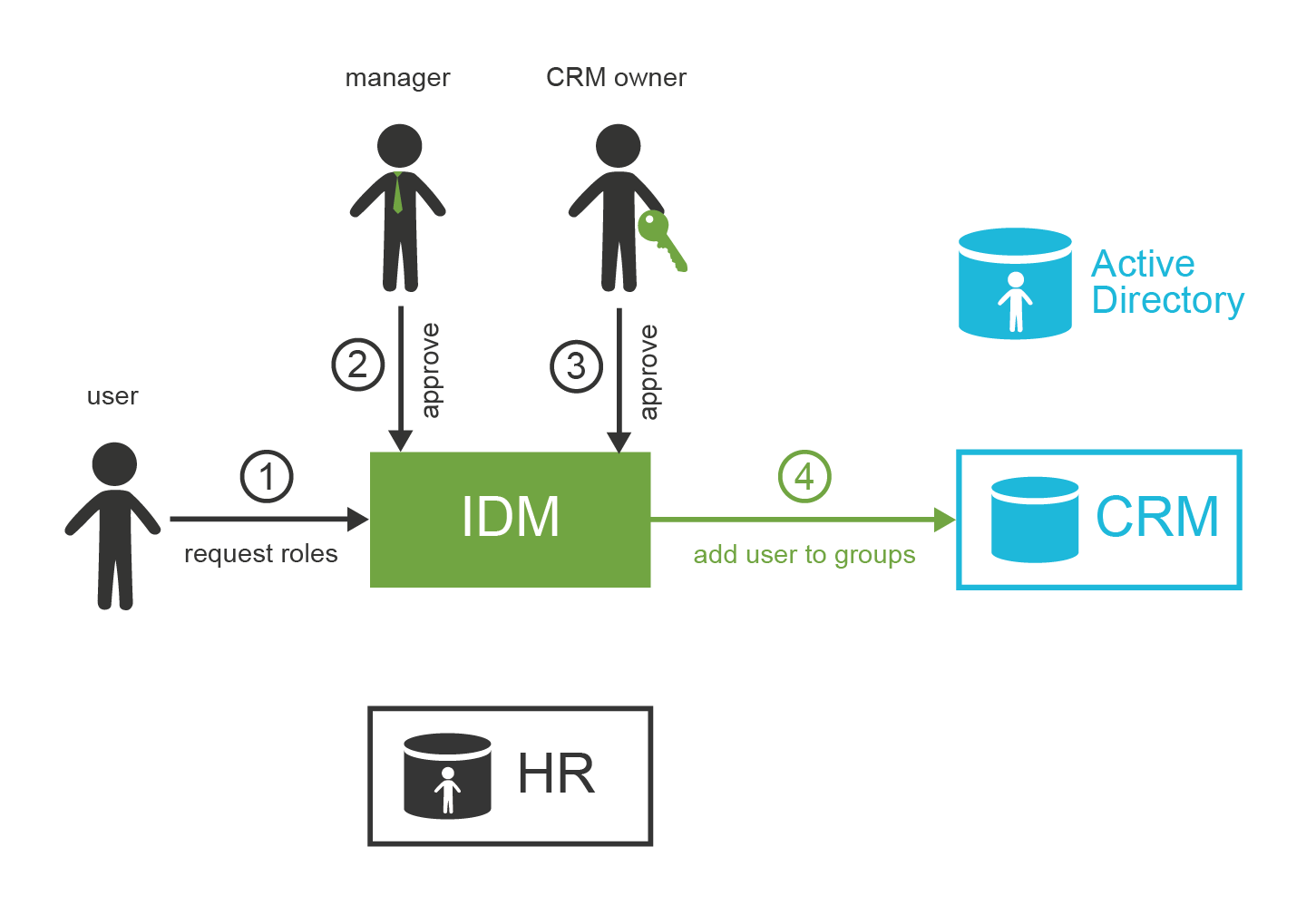

Alice starts her career, and she is a really efficient employee. Therefore, she gets more responsibilities. Alice is going to prepare specialized market analyses based on empirical data gathered in the field. ExAmPLE is an innovative company, always inventing new ways to make business operations more efficient. Therefore, they invented this work position especially to take advantage of Alice’s skills. This means there is no work position code for Alice’s new job. However, she needs new privileges in the CRM system to do her work efficiently. She needs them right now. Fortunately, ExAmPLE has a flexible IDM system. Alice can log into the IDM system, select the privileges that she needs and request them. The request has to be approved by Alice’s manager and by the CRM system owner too. They get a notification about the request, and they can easily approve or reject it in the IDM system. Once the request is approved, Alice’s CRM account is automatically added to appropriate CRM groups. Alice may start working on her analysis minutes or hours after she has requested the privileges.
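The request-and-approve flow can be modeled as a request that stays pending until every required approver has decided. The following is a minimal sketch under assumed names (`AccessRequest`, `decide`, the approver labels and the privilege name are all hypothetical), not a description of a real product's workflow engine.

```python
from dataclasses import dataclass, field


@dataclass
class AccessRequest:
    requester: str
    privilege: str
    approvers: frozenset  # everyone who must approve, e.g. manager and owner
    decisions: dict = field(default_factory=dict)

    def decide(self, approver: str, approved: bool) -> None:
        """Record the decision of one of the required approvers."""
        if approver not in self.approvers:
            raise ValueError(f"{approver} is not an approver of this request")
        self.decisions[approver] = approved

    @property
    def status(self) -> str:
        # A single rejection rejects the whole request.
        if any(decision is False for decision in self.decisions.values()):
            return "rejected"
        # Approved only once all required approvers have agreed.
        if set(self.decisions) == set(self.approvers):
            return "approved"
        return "pending"


request = AccessRequest(
    requester="alice",
    privilege="CRM market analysis",  # hypothetical privilege name
    approvers=frozenset({"manager", "crm_owner"}),
)
status_initial = request.status
request.decide("manager", True)
status_after_manager = request.status
request.decide("crm_owner", True)
status_final = request.status
```

Only when the status reaches "approved" would the IDM system go on to add the CRM account to the requested groups.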

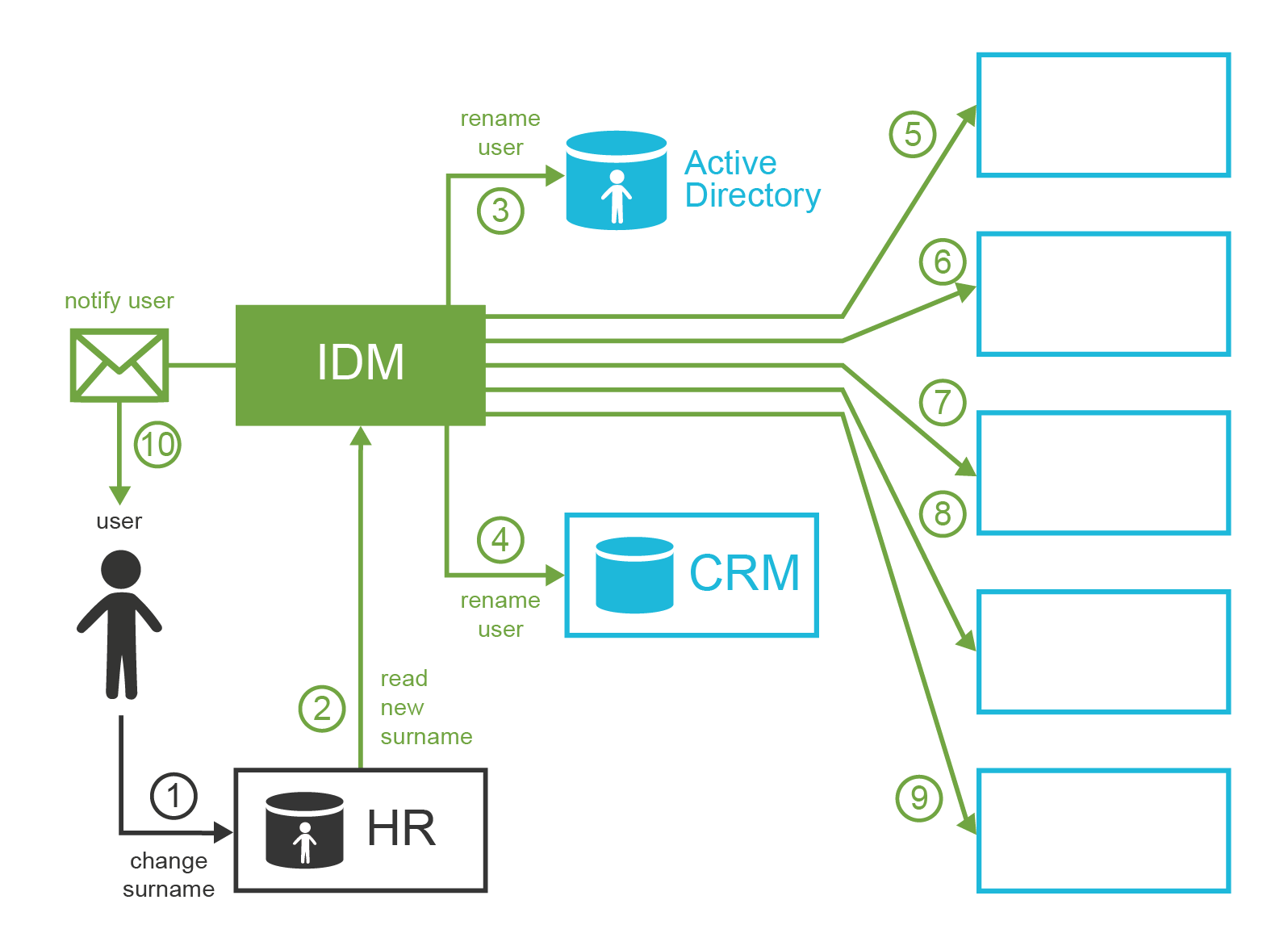

Alice lives happily ever after. One day she decides to get married. Alice, similarly to many other women, has the strange habit of changing her surname after the marriage. Alice has a really responsible work position now, and she has accounts in a dozen information systems. It is no easy task to change her name in all of them, is it? In fact, it is very easy because ExAmPLE has its IDM system. Alice goes to the HR department, and the HR staff changes her surname in the HR system. The IDM system will pick up the change and propagate it to all the affected systems. Alice even automatically gets a new e-mail address with her new surname (keeping the old one as an alias). Alice receives a notification that she can now use her new e-mail address. The change is fast, clean and effortless.

Later that day Alice discovers that her password is about to expire. Changing the password in all the applications would be a huge task. Alice knows exactly what to do. She logs into the IDM system and changes her password there. The password change is automatically propagated to each affected system according to policy set up by the IT security office.

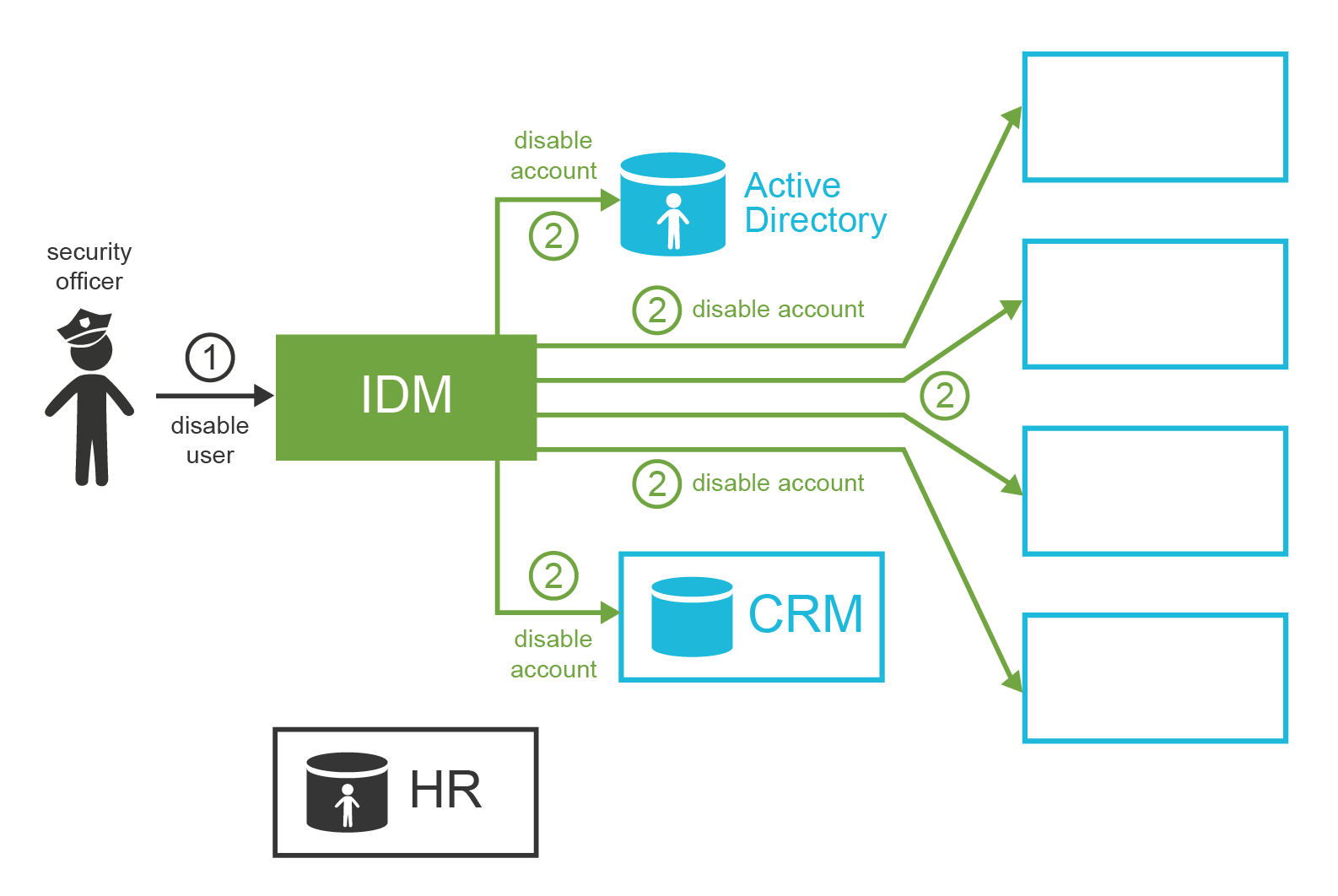

Something unexpected happens the following month. There is a security incident. The security office discovered the incident, and they are investigating it. It looks like it was an insider job. The security officers are using the data from the IDM system to focus their investigation on users that had privileges to access affected information assets. They pinpoint Mallory as a prime suspect. The interesting thing is that Mallory should not have such powerful privileges at all. Luckily, the IDM system also keeps an audit trail about every privilege change. Therefore, the security team discovers that it was Mallory’s colleague Oscar who assigned these privileges to Mallory. Both men are to be interviewed. As this incident affects sensitive assets, there are some preventive measures to be executed before any word about the incident spreads. The security officers use the IDM system to immediately disable all the accounts that Mallory and Oscar have. It takes just a few seconds for IDM to disable these accounts in all the affected applications.

Later, the investigation reveals that Oscar is mostly innocent. Mallory misused Oscar’s trust and tricked him into assigning these extra privileges. Mallory abused the privileges to get sensitive data which he tried to sell. The decision is that Mallory has to immediately leave the company while Oscar may stay. However, as Oscar has shown poor judgment in this case, his responsibilities are reduced. The IDM system is used to permanently disable all Mallory’s accounts, re-enable Oscar’s accounts, and also to revoke powerful privileges that are considered too risky for Oscar to have.

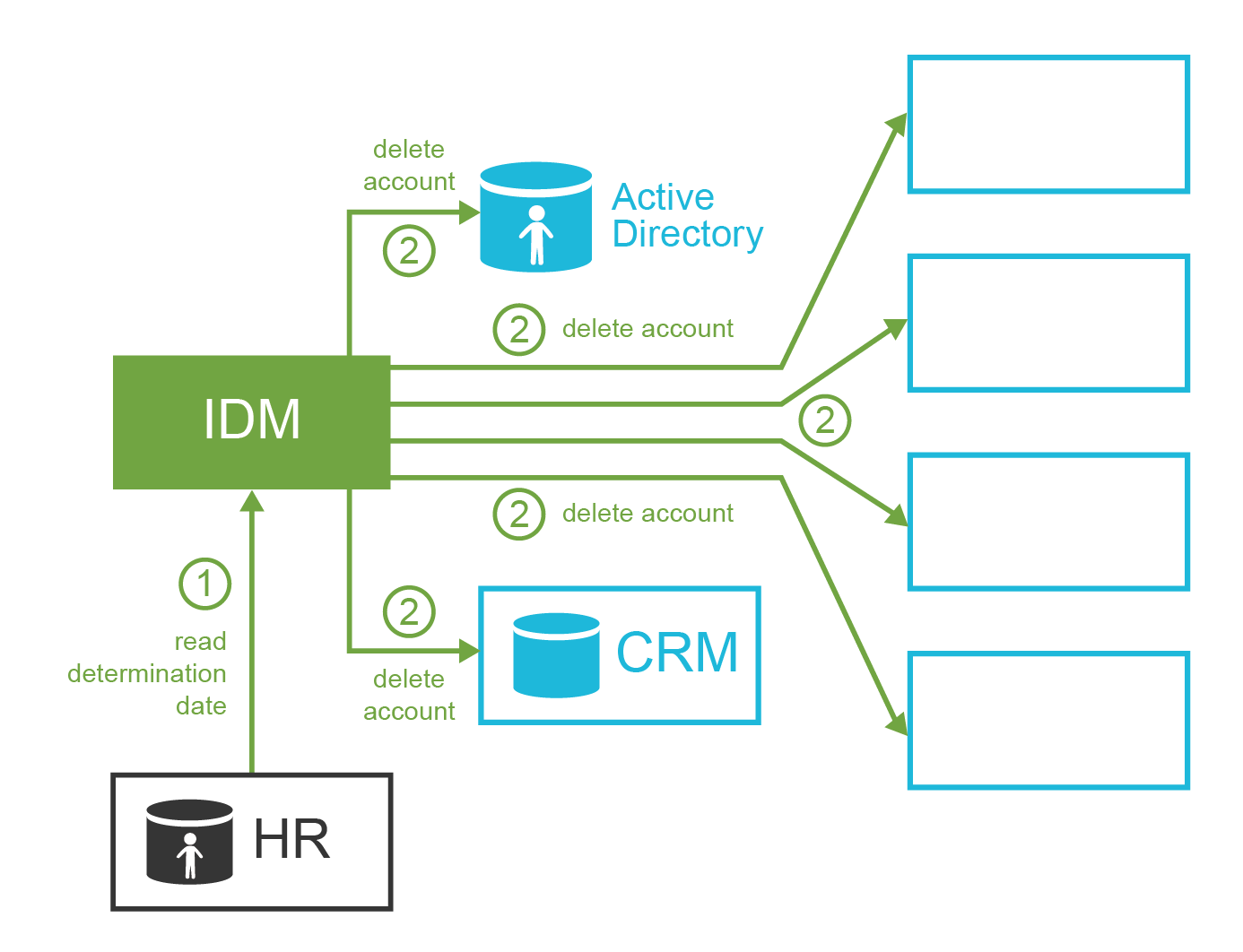

A few months later, Oscar is still ashamed because of his failure. He decides not to prolong his employee contract with ExAmPLE, and to leave the company without causing any more trouble. Oscar’s contract expires at the end of the month. This date is recorded in the HR system, and the IDM system takes it from there. Therefore, at midnight on Oscar’s last day at work, the IDM system automatically deletes all Oscar’s accounts. Oscar starts a new career as a barman in New York. He is very successful.
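The date-driven deprovisioning can be pictured as a periodic check of contract end dates taken from HR. This is a rough sketch under the assumption that the contract is valid through its end date and accounts are removed once that day is over; the function name and the dates are made up for illustration.

```python
from datetime import date


def users_to_deprovision(contract_end: dict, today: date) -> list:
    """Return users whose last contract day has already passed."""
    return sorted(user for user, end in contract_end.items() if end < today)


# Hypothetical contract end dates as they might be read from the HR system.
contract_end = {"oscar": date(2024, 5, 31), "alice": date(2026, 12, 31)}

# On Oscar's last day nothing happens yet; after midnight he is picked up.
due_on_last_day = users_to_deprovision(contract_end, today=date(2024, 5, 31))
due_next_day = users_to_deprovision(contract_end, today=date(2024, 6, 1))
```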

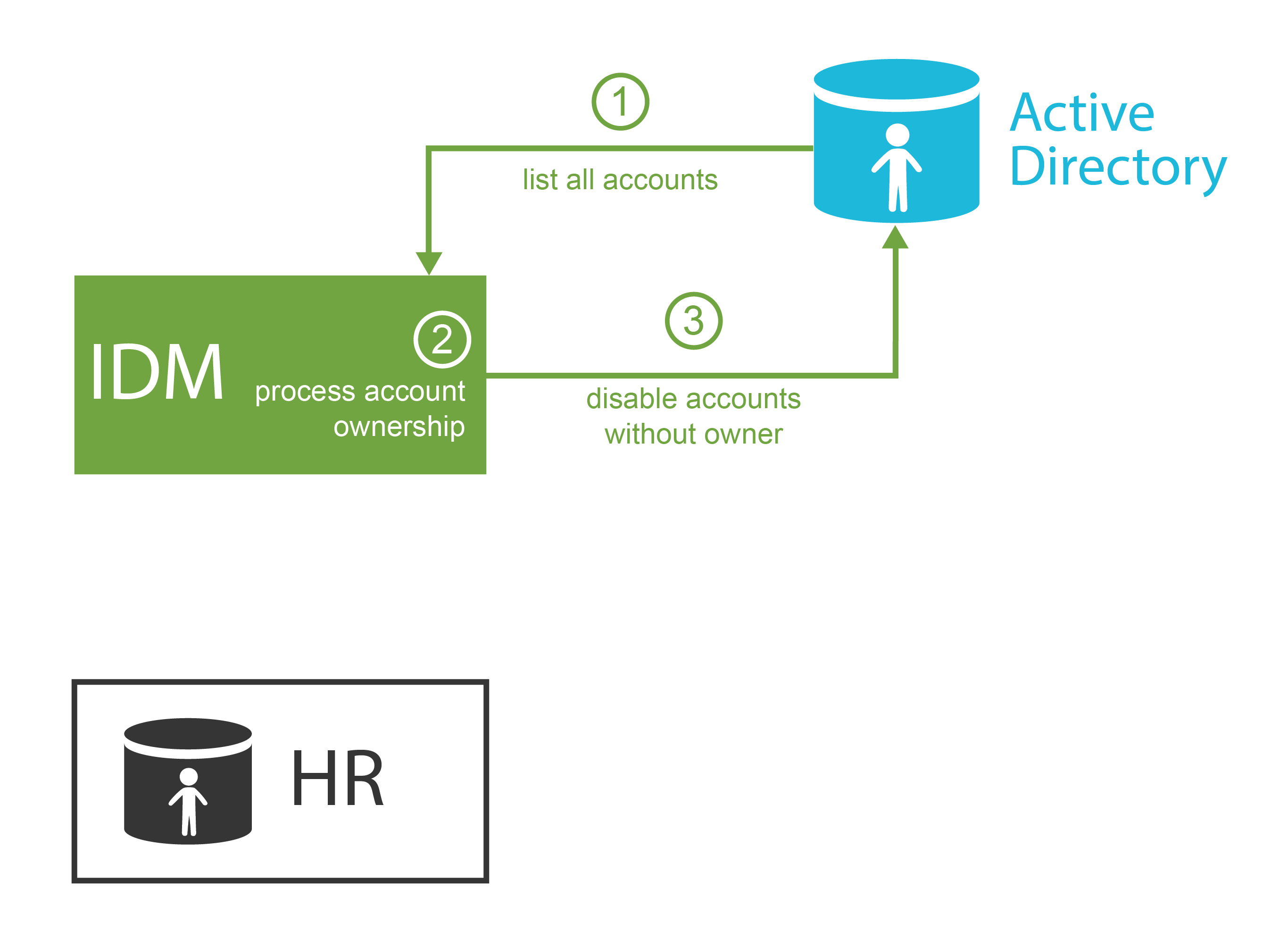

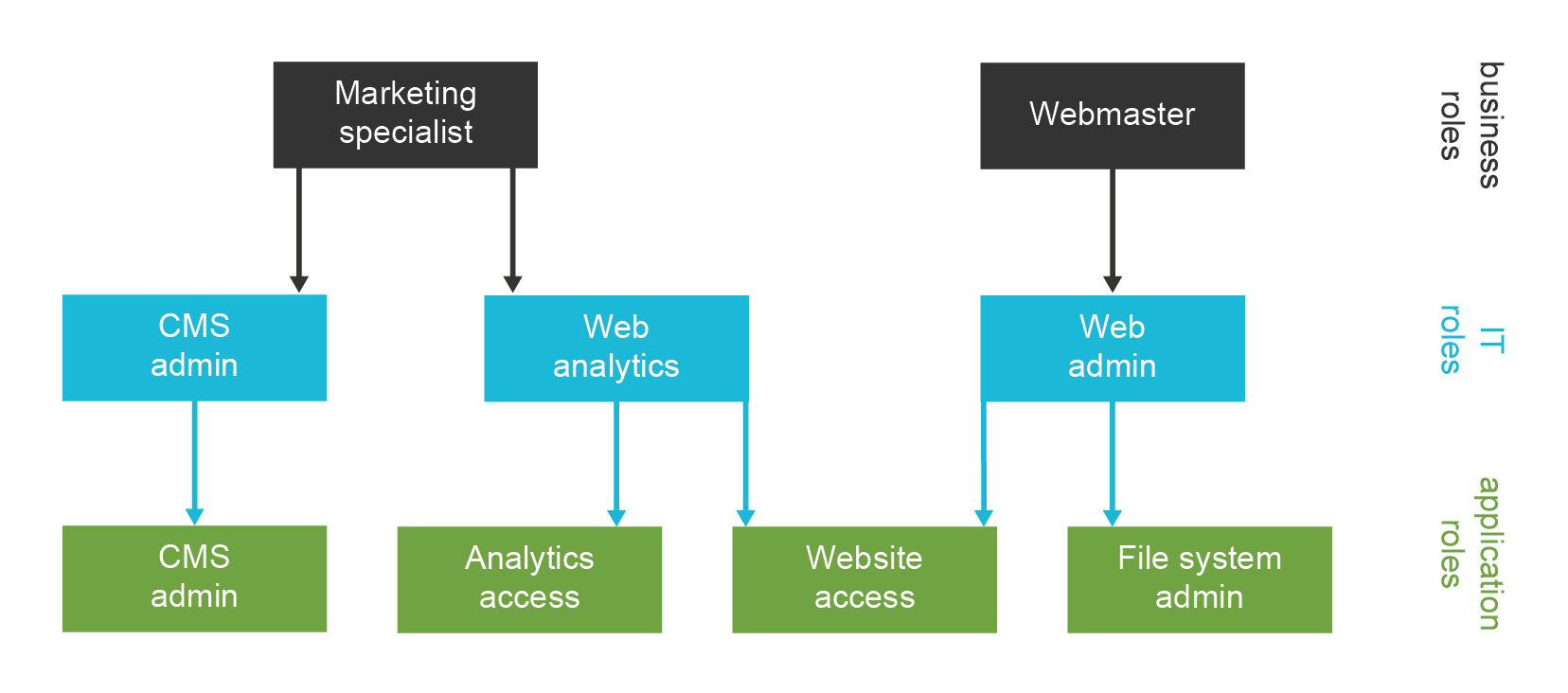

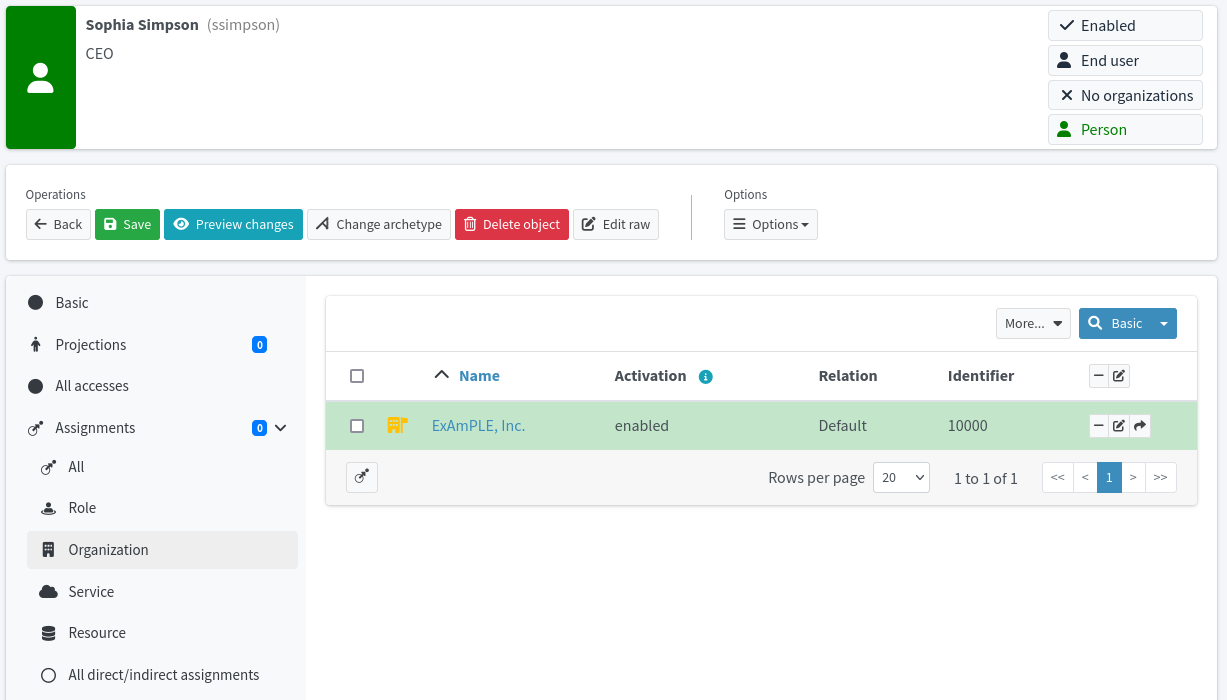

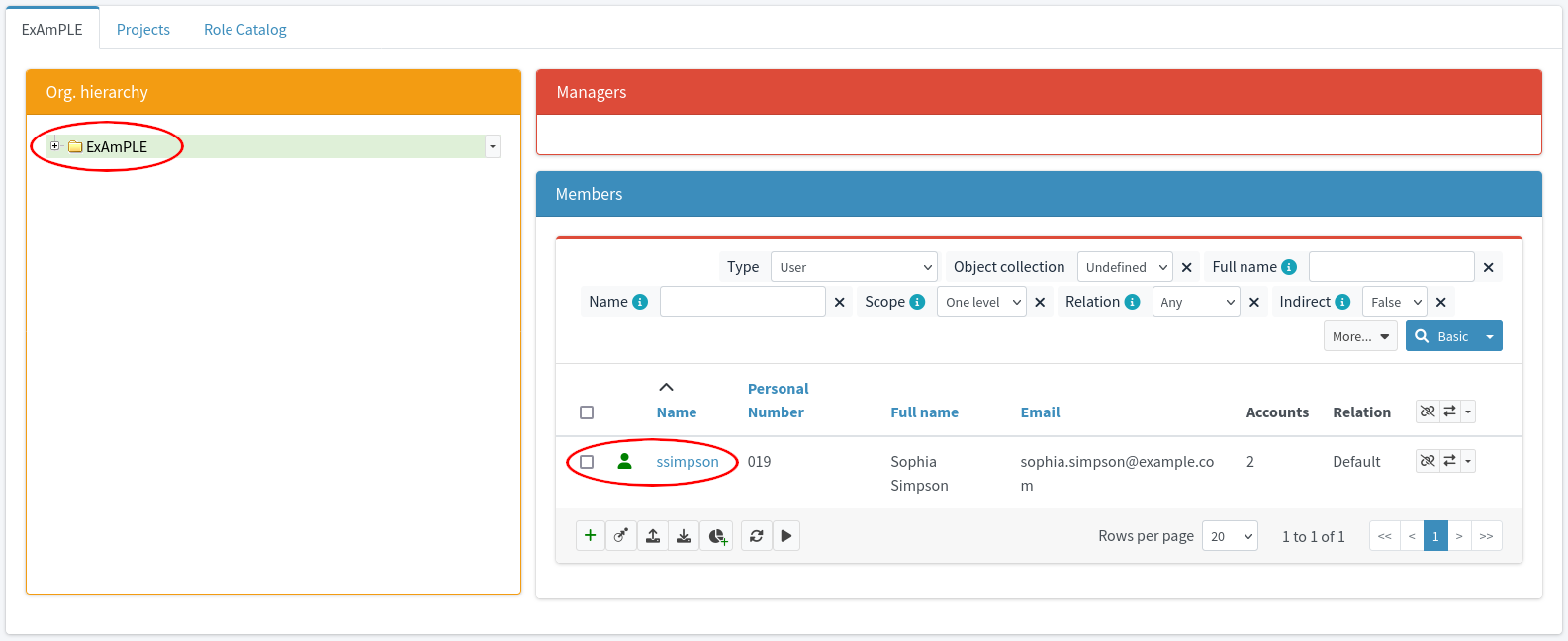

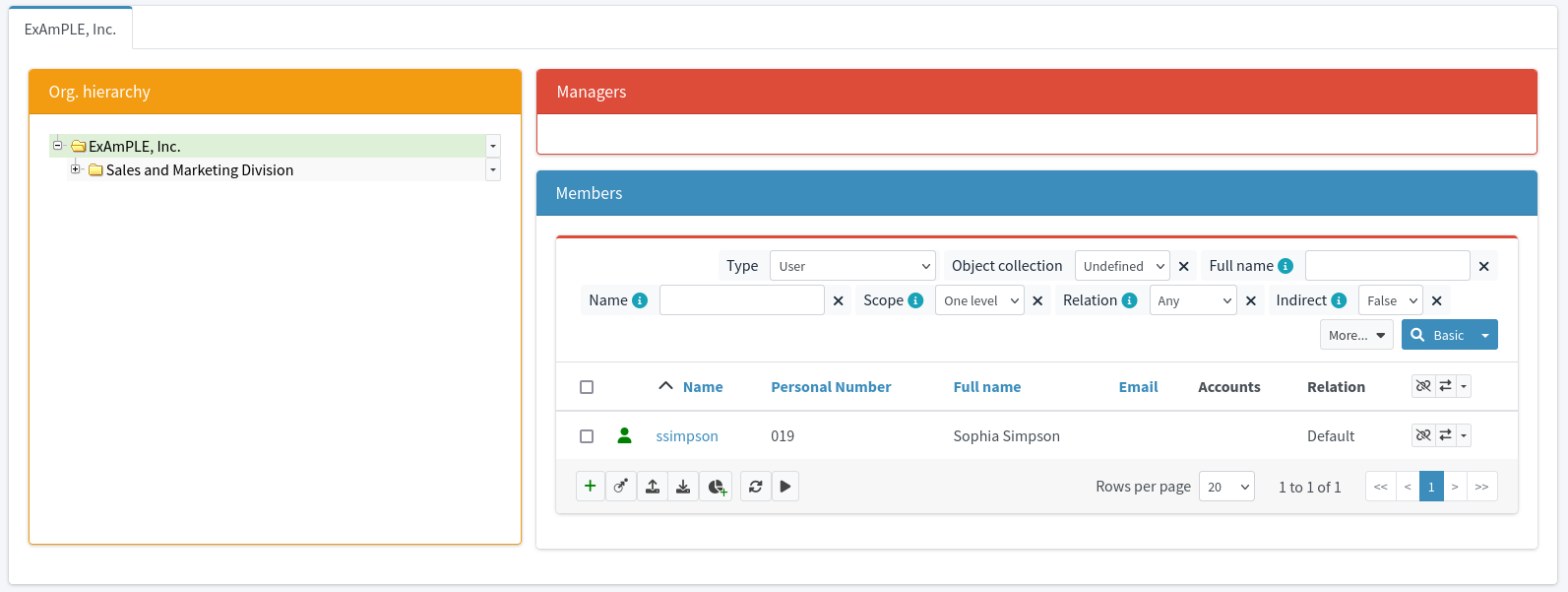

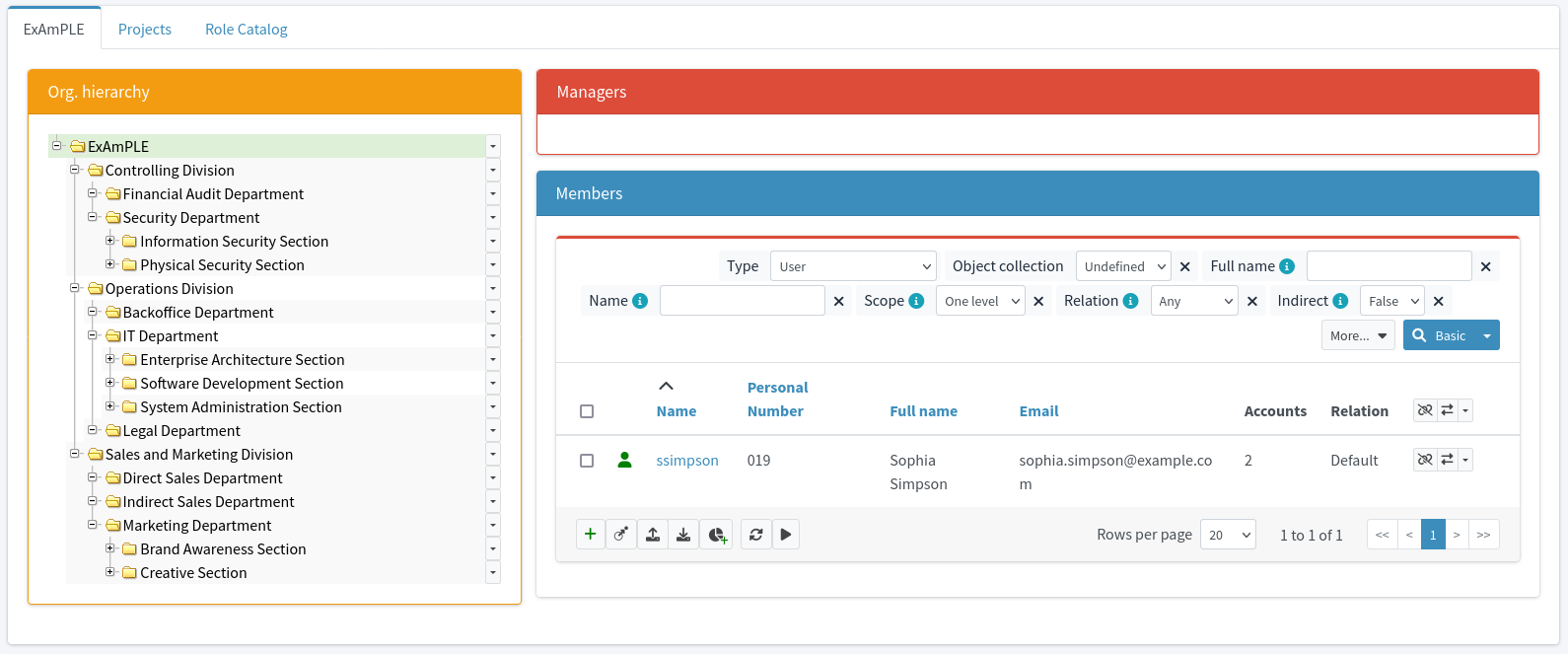

The security office has handled the security incident professionally, and the IDM system provided crucial data to make the security response quick and efficient. The security team receives praise from the board of directors. The team always tries to improve. They try to learn from the incident and reduce the possibility of such a thing happening again. The team is using data from the IDM system to analyze the privileges assigned to individual users. The usual job of the IDM system is to create and modify accounts in the applications. However, the IDM system is using bidirectional communication with the applications. Therefore, this analysis is far from being yet another pointless spreadsheet exercise. The analysis is based on real application data processed and unified by the IDM system: the real accounts, which users they belong to, what roles they have, which groups they belong to and so on. The IDM system can detect accounts that do not have any obvious owner. The analysis discovers quite a rich collection of testing accounts that were obviously used during the last data center outage half a year ago. The IT operations staff obviously forgot about these accounts after the outage. The security staff disables the accounts using the IDM tools and sets up an automated process to watch out for such accounts in the future.
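Detecting accounts without an owner boils down to comparing what actually exists in the applications with what the IDM system knows about. A minimal sketch of that comparison follows; the data layout, the function name and the sample account names are assumptions for illustration only.

```python
def find_orphaned_accounts(resource_accounts: dict, owners: dict) -> list:
    """List (system, account) pairs that exist in an application
    but are not linked to any user known to the IDM system."""
    return sorted(
        (system, account)
        for system, accounts in resource_accounts.items()
        for account in accounts
        if (system, account) not in owners
    )


# Accounts as read back from the applications (hypothetical data).
resource_accounts = {
    "Active Directory": {"alice", "test01", "test02"},
    "CRM": {"alice", "dr-test"},
}
# Links between accounts and their owners, as kept by the IDM system.
owners = {
    ("Active Directory", "alice"): "alice",
    ("CRM", "alice"): "alice",
}

orphans = find_orphaned_accounts(resource_accounts, owners)
```

Running such a comparison on a schedule is one way to picture the automated process that watches for forgotten accounts.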